What is MLOps?

Machine learning has become a primary driver of competitive advantage, yet deploying models at scale remains one of the biggest challenges for enterprises. Many projects stall after proof of concept, not because the algorithms fail, but because the operational foundation is missing. MLOps (machine learning operations) best practices close this gap by bringing to model development and deployment the same discipline and reliability you expect from any mission-critical system.

In 2025, the MLOps pipeline has moved from a technical consideration to a boardroom priority. Enterprises that follow machine learning best practices, including MLOps for deep learning, can move models from experimentation to production with scalability, governance, and measurable business impact. The payoff shows up in four areas:

- Faster value capture by reducing the time between AI experiments and real business impact.

- Operational resilience by ensuring models adapt to changes in data and market conditions without disruption.

- Regulatory confidence by embedding compliance, auditability, and ethical safeguards from the start.

- Scalable growth by creating repeatable processes that work across teams, regions, and product lines.

Organisations that treat MLOps as part of their core infrastructure will move ahead of those that see it as an add-on to data science. Success is not about putting models into production but about keeping them accurate, reliable, and trusted at scale. This is what turns AI from a one-off project into a lasting business capability.

Why MLOps Matters in 2025

AI models now run core processes, from credit scoring to supply chain forecasting. When they fail, the business feels it instantly. MLOps best practices ensure these models transition from prototype to production quickly, remain accurate as data evolves, and meet compliance requirements without compromising delivery speed.

Organizations that build a strong MLOps pipeline and adopt machine learning best practices deploy faster, scale more easily, and maintain trust with both customers and regulators. In 2025, the ability to operationalize AI, including deep learning models, is a defining factor that separates market leaders from those struggling to keep up.

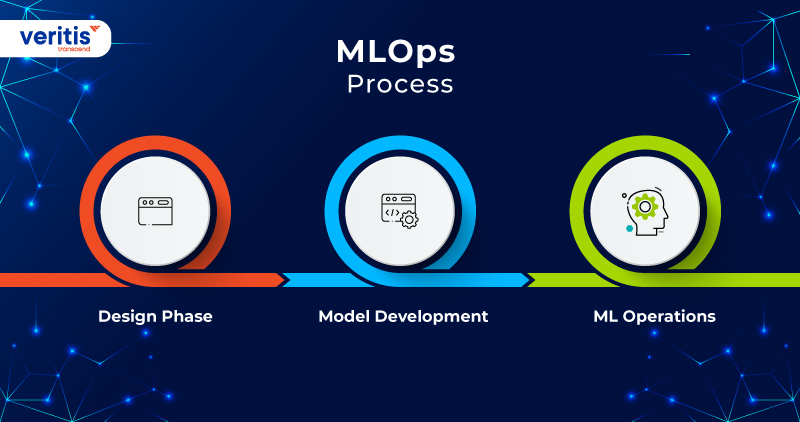

MLOps Process

Think of the MLOps process as a production line for intelligence. Each stage serves a purpose, and skipping one will cost you later.

1) Design Phase

This is where intent becomes a plan. Before a single model is trained, the business case is locked in, the available data is mapped, and the success criteria are agreed upon. It is the stage where you decide not only what to build but why it matters.

What Happens Here:

- Choose the problem worth solving

- Prioritise use cases that can deliver measurable value

- Verify that the correct data exists and meets quality standards

- Set the performance and compliance bar that the model must clear

2) Model Development

Here, ideas turn into working models. Data engineers prepare clean, relevant datasets. Data scientists test algorithms and features. The best candidates are tuned and tested until they can meet real-world demands, not just lab benchmarks.

What Happens Here:

- Prepare and structure data for training

- Experiment with multiple algorithms

- Validate performance on unseen datasets

- Record results so they can be replicated and improved
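The validation and record-keeping steps in this list can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not a prescribed method: the toy threshold "model", the `RunRecord` fields, and the 80/20 split are all hypothetical. What it shows is that a fixed random seed plus a fingerprint of the data and parameters gives each run an ID that someone else can reproduce exactly.

```python
import hashlib
import json
import random
from dataclasses import dataclass, asdict


@dataclass
class RunRecord:
    """Everything needed to replicate one experiment run (illustrative fields)."""
    run_id: str
    params: dict
    data_fingerprint: str
    seed: int
    holdout_accuracy: float


def fingerprint(rows):
    # Hash the dataset so a later run can prove it used identical inputs.
    return hashlib.sha256(json.dumps(rows).encode()).hexdigest()[:16]


def fit_threshold(train):
    # Toy "training" step: place the decision boundary at the mean feature value.
    return sum(x for x, _ in train) / len(train)


def accuracy(rows, threshold):
    # Fraction of rows where "feature > threshold" matches the label.
    return sum((1 if x > threshold else 0) == y for x, y in rows) / len(rows)


def run_experiment(rows, params, seed):
    rng = random.Random(seed)              # fixed seed -> identical split on every rerun
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * params["train_fraction"])
    train, holdout = shuffled[:cut], shuffled[cut:]
    threshold = fit_threshold(train)       # fitted on training rows only
    acc = accuracy(holdout, threshold)     # scored on rows the model never saw
    fp = fingerprint(rows)
    run_id = hashlib.sha256(
        json.dumps({"params": params, "data": fp, "seed": seed}, sort_keys=True).encode()
    ).hexdigest()[:12]
    return RunRecord(run_id, params, fp, seed, acc)


# Hypothetical dataset: one feature in [0, 1], label 1 when the feature exceeds 0.5.
data = [(x / 10, int(x > 5)) for x in range(11)] * 3
first = run_experiment(data, {"train_fraction": 0.8}, seed=7)
again = run_experiment(data, {"train_fraction": 0.8}, seed=7)
print(json.dumps(asdict(first)))
```

Rerunning with the same data, parameters, and seed yields an identical record, which is what makes a result auditable. In practice the record would be written to an experiment tracker rather than printed.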

3) ML Operations

The model leaves the lab and joins the business. It is deployed, monitored, and maintained like any other mission-critical system. Continuous integration, continuous training, and continuous deployment (CI/CT/CD) let the model adapt without disrupting the services that depend on it. Drift is detected early, and retraining is triggered automatically.

What Happens Here:

- Deploy into production environments

- Monitor for accuracy, latency, and unexpected behaviour

- Manage versions for quick rollback if needed

- Automate retraining when data or performance shifts
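Automating retraining usually comes down to a rule that watches live outcomes. The Python sketch below assumes that ground-truth labels eventually arrive for production predictions; the `RetrainTrigger` class, the window size, and the accuracy floor are illustrative choices, not a standard.

```python
from collections import deque


class RetrainTrigger:
    """Flags retraining when rolling accuracy on labelled production traffic
    drops below a floor. Window size and floor are illustrative assumptions."""

    def __init__(self, window=100, min_accuracy=0.90):
        self.outcomes = deque(maxlen=window)   # 1 = correct prediction, 0 = wrong
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        self.outcomes.append(1 if prediction == actual else 0)
        return self.should_retrain()

    def should_retrain(self):
        # Wait for a full window so a handful of early errors cannot fire the trigger.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.min_accuracy


trigger = RetrainTrigger(window=10, min_accuracy=0.8)
for _ in range(10):
    trigger.record(prediction=1, actual=1)          # healthy period
for _ in range(3):
    fired = trigger.record(prediction=1, actual=0)  # labels start disagreeing
print(fired)  # True: only 7 of the last 10 predictions were correct
```

Waiting for a full window trades a slightly slower reaction for fewer false alarms. A real trigger would typically launch a retraining pipeline rather than just return a flag.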

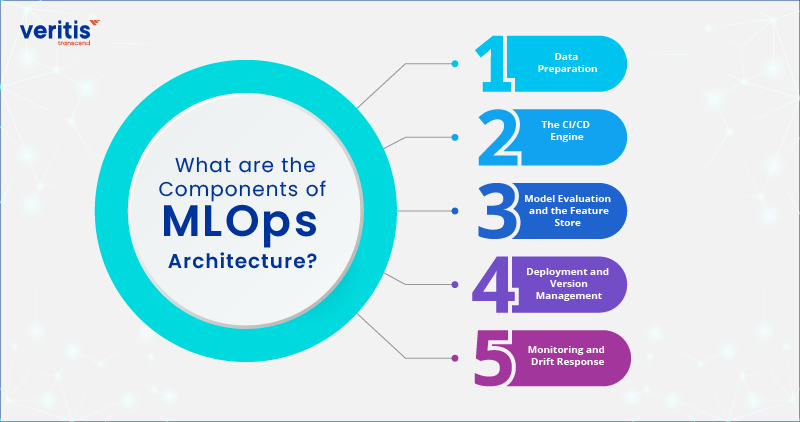

What Are the Components of MLOps Architecture?

A strong MLOps architecture works like a high-performing team. Every role is clear, every handoff is smooth, and nothing falls through the cracks. That outcome comes from applying MLOps best practices across the entire pipeline, from model training to monitoring, whether you are scaling traditional models or deep learning systems. Here is how the key pieces fit together.

1) Data Preparation

Models are only as good as the data that feeds them. The architecture begins with systems that pull data from multiple sources, automatically check its quality, and shape it into a training-ready form. By the time data reaches a model, it has already passed checks for accuracy and relevance.

What This Delivers:

- Cleaner inputs for better predictions

- Early detection of bad or incomplete data

- Features engineered for maximum predictive power
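Automated quality checks are often just named rules applied to every incoming record. This Python sketch assumes a hypothetical loan-application feed; the field names, the rules, and the 5% batch reject threshold are placeholders to adapt, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]   # returns True when the record passes


# Illustrative rules for a hypothetical loan-application feed.
RULES = [
    Rule("income_present", lambda r: r.get("income") is not None),
    Rule("income_non_negative", lambda r: r.get("income") is None or r["income"] >= 0),
    Rule("age_in_range", lambda r: isinstance(r.get("age"), int) and 18 <= r["age"] <= 120),
]


def validate(rows, rules=RULES, max_reject_rate=0.05):
    """Split rows into clean and rejected; fail the whole batch if too many are bad."""
    clean, rejected = [], []
    for row in rows:
        failures = [rule.name for rule in rules if not rule.check(row)]
        if failures:
            rejected.append((row, failures))   # keep the reasons for debugging
        else:
            clean.append(row)
    reject_rate = len(rejected) / len(rows) if rows else 0.0
    return clean, rejected, reject_rate <= max_reject_rate


rows = [
    {"income": 52000, "age": 34},
    {"income": None, "age": 41},
    {"income": -10, "age": 17},
]
clean, rejected, ok = validate(rows)
for row, failures in rejected:
    print(row, failures)
print("batch accepted:", ok)   # False: 2 of 3 rows failed
```

Rejecting the whole batch when the failure rate spikes catches upstream breakages early, before bad data reaches training.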

2) The CI/CD Engine

This is the automated pipeline that turns experiments into deployable models. Code, data, and configurations all travel through the same integration and delivery path. Tests and approvals run along the way, so only production-ready assets are released.

What This Delivers:

- Faster deployment with fewer human bottlenecks

- Consistency between development and production environments

- Built in quality control before release
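A common way to build quality control into release is a promotion gate: a script the CI/CD pipeline runs that fails the build unless the candidate model clears fixed thresholds and beats the model already in production. The metric names (AUC, p95 latency) and threshold values below are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Metrics:
    auc: float
    p95_latency_ms: float


def promotion_gate(candidate: Metrics, champion: Optional[Metrics],
                   min_auc: float = 0.75, max_latency_ms: float = 200.0,
                   min_uplift: float = 0.0) -> Tuple[bool, List[str]]:
    """Return (approved, reasons). Thresholds are placeholders, not recommendations."""
    reasons = []
    if candidate.auc < min_auc:
        reasons.append(f"AUC {candidate.auc:.3f} below floor {min_auc}")
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append(f"p95 latency {candidate.p95_latency_ms} ms exceeds {max_latency_ms} ms")
    if champion is not None and candidate.auc - champion.auc < min_uplift:
        reasons.append("candidate does not beat the current production model")
    return (not reasons, reasons)


approved, reasons = promotion_gate(Metrics(auc=0.81, p95_latency_ms=120),
                                   champion=Metrics(auc=0.79, p95_latency_ms=110))
blocked, why = promotion_gate(Metrics(auc=0.70, p95_latency_ms=250), champion=None)
print(approved, reasons)   # True []
print(blocked, why)        # False, with two reasons
# In a CI job, a non-zero exit code stops the pipeline, e.g. sys.exit(0 if approved else 1).
```

Returning the reasons alongside the verdict gives reviewers an explanation in the build log instead of a bare failure.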

3) Model Evaluation and the Feature Store

Before a model is cleared for launch, it goes through rigorous evaluation. If it passes, the features it relies on are stored in a central repository, allowing future models to reuse proven building blocks instead of starting from scratch.

What This Delivers:

- Models that meet performance and compliance thresholds

- Reduced duplication of work

- A shared library of trusted features
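The reuse idea behind a feature store can be shown with a small in-memory registry. Production feature stores add offline and online storage, point-in-time correctness, and access control; this sketch only captures versioned feature definitions and which models use them. `FeatureRegistry` and its methods are hypothetical, not the API of any particular product.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FeatureDefinition:
    name: str
    version: int
    transform: Callable[[dict], float]   # how the feature is computed from raw data
    owner: str
    used_by: set = field(default_factory=set)


class FeatureRegistry:
    """In-memory stand-in for a feature store catalog (hypothetical API)."""

    def __init__(self):
        self._features = {}

    def register(self, feature):
        key = (feature.name, feature.version)
        if key in self._features:
            # Versions are immutable: changing logic means registering a new version.
            raise ValueError(f"{feature.name} v{feature.version} already registered")
        self._features[key] = feature

    def get(self, name, version, model):
        feature = self._features[(name, version)]
        feature.used_by.add(model)   # record which models depend on this feature
        return feature

    def build_vector(self, raw, specs, model):
        # specs: list of (feature_name, version) pairs the model was trained with
        return {f"{n}_v{v}": self.get(n, v, model).transform(raw) for n, v in specs}


registry = FeatureRegistry()
registry.register(FeatureDefinition(
    "debt_to_income", 1, lambda r: r["debt"] / r["income"], owner="risk-team"))
raw = {"debt": 20000, "income": 80000}
vec = registry.build_vector(raw, [("debt_to_income", 1)], model="credit_v3")
registry.build_vector(raw, [("debt_to_income", 1)], model="fraud_v1")  # reused, not rebuilt
print(vec)  # {'debt_to_income_v1': 0.25}
```

Tracking `used_by` also answers an audit question cheaply: which models are affected if a feature definition turns out to be wrong.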

4) Deployment and Version Management

Once a model is live, it becomes part of the business workflow. Version management ensures every model in production can be traced back to its source and replaced or rolled back without guesswork.

What This Delivers:

- Confidence in knowing exactly which version is running

- Ability to respond instantly to failures or performance drops

- Clear lineage for auditing and troubleshooting
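Traceable versions and one-step rollback can be sketched with a registry that never overwrites a version and keeps a history of what was in production. The `ModelRegistry` class, its fields, and the storage URIs are hypothetical; tools such as the MLflow Model Registry offer this capability in practice through their own APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelVersion:
    version: int
    artifact_uri: str    # where the trained model is stored (hypothetical URI)
    git_commit: str      # code that produced it
    data_snapshot: str   # dataset snapshot it was trained on
    registered_at: str


class ModelRegistry:
    """Minimal registry: versions are never overwritten, and a history records
    which version was in production, so rollback needs no guesswork."""

    def __init__(self):
        self.versions = []
        self.history = []          # production version numbers, oldest first

    def register(self, artifact_uri, git_commit, data_snapshot):
        mv = ModelVersion(len(self.versions) + 1, artifact_uri, git_commit,
                          data_snapshot, datetime.now(timezone.utc).isoformat())
        self.versions.append(mv)
        return mv

    def promote(self, version):
        self.history.append(version)

    @property
    def production(self):
        return self.versions[self.history[-1] - 1]

    def rollback(self):
        if len(self.history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self.history.pop()         # retire the faulty release
        return self.production


reg = ModelRegistry()
reg.register("s3://models/credit/1", "a1b2c3d", "snapshot-2025-01")
reg.register("s3://models/credit/2", "e4f5a6b", "snapshot-2025-04")
reg.promote(1)
reg.promote(2)
print(reg.production.version)                  # 2
restored = reg.rollback()
print(restored.version, restored.git_commit)   # 1 a1b2c3d
```

Because each version carries its commit and data snapshot, the rollback target is also fully traceable, which is the lineage auditors ask for.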

5) Monitoring and Drift Response

Even the best models degrade over time as data shifts. Automated monitoring tracks accuracy, latency, and data patterns in real time. If performance dips or drift is detected, retraining can be triggered automatically.

What This Delivers:

- Early warnings before problems affect the business

- Automatic adaptation to new data

- Sustained model performance over the long term
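One widely used drift signal is the Population Stability Index (PSI), which compares a feature's distribution in live traffic with its distribution at training time: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the shares of training-time and live values in bin i. The sketch below assumes ten equal-width bins over the training range, and its 0.1 / 0.25 cut-offs are common rules of thumb rather than standards.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a training-time sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0          # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clip outliers into edge bins
            counts[idx] += 1
        # eps keeps log() defined when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score, warn=0.1, alert=0.25):
    # Rule-of-thumb cut-offs; tune per feature and business impact.
    if score >= alert:
        return "retrain"
    if score >= warn:
        return "investigate"
    return "stable"


baseline = [i / 100 for i in range(1000)]     # feature values seen at training time
live = [x + 5 for x in baseline]              # the same feature after a large shift
print(drift_status(psi(baseline, baseline)))  # stable
print(drift_status(psi(baseline, live)))      # retrain
```

PSI only detects changes in inputs; pairing it with outcome-based checks catches cases where the inputs look stable but the relationship to the target has changed.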

When these components work together, MLOps stops being a technical process and becomes an operational advantage. The architecture doesn't just support models; it supports decisions, revenue, and resilience across the organisation.

MLOps in Regulated Industries

In regulated sectors, trust is not earned through accuracy alone; it is enforced by law. Finance, healthcare, and government operate under rules that demand transparency, auditability, and control over every model in production. MLOps best practices form the operational backbone that makes this level of governance possible.

A well-structured MLOps pipeline keeps models compliant without sacrificing performance. When machine learning best practices are embedded in the pipeline, for traditional and deep learning models alike, organizations can meet regulatory demands without losing agility, and compliance becomes a competitive advantage rather than a bottleneck.

1) Finance

Banks and insurers face strict oversight of credit scoring, anti-money-laundering models, fraud detection, and trading algorithms. MLOps pipelines can log every training run, store every model version, and maintain data lineage down to the transaction level. If a regulator asks why a loan was declined, the bank can show exactly which model was used, what data it saw, and how it reached its decision.
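Answering "why was this loan declined?" depends on recording every decision together with the model version and inputs that produced it. A minimal Python sketch, assuming a hypothetical `DecisionLog` class: each entry stores a hash of the inputs and the hash of the previous entry, so any later edit breaks the chain and becomes detectable. A production system would also keep the inputs themselves (or a pointer to them) in governed storage and write to append-only infrastructure; the application IDs, version labels, and reason codes here are made up.

```python
import hashlib
import json
from datetime import datetime, timezone


def _sha256(obj):
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only log; each entry hashes the previous one so edits are detectable."""

    def __init__(self):
        self.entries = []

    def record(self, application_id, model_version, features, decision, reason_codes):
        entry = {
            "application_id": application_id,
            "model_version": model_version,
            "input_hash": _sha256(features),          # proves which inputs were scored
            "decision": decision,
            "reason_codes": reason_codes,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else "genesis",
        }
        entry["entry_hash"] = _sha256(entry)          # hash covers every field above
        self.entries.append(entry)
        return entry

    def verify(self):
        # Recompute every hash and check each entry points at its predecessor.
        prev = "genesis"
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "entry_hash"}
            if e["prev_hash"] != prev or e["entry_hash"] != _sha256(body):
                return False
            prev = e["entry_hash"]
        return True

    def explain(self, application_id):
        return [e for e in self.entries if e["application_id"] == application_id]


log = DecisionLog()
log.record("APP-1001", "credit-risk:v7", {"income": 52000, "dti": 0.41},
           "declined", ["DTI_ABOVE_LIMIT"])
log.record("APP-1002", "credit-risk:v7", {"income": 91000, "dti": 0.18},
           "approved", [])
print(log.verify())   # True while nobody has altered the log
```

Hash chaining only makes tampering visible; it does not prevent it, which is why the log itself should live in storage the model team cannot rewrite.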

2) Healthcare

Clinical AI systems, from diagnostic imaging to treatment recommendations, must comply with HIPAA in the US, the GDPR in Europe, and equivalent laws elsewhere. MLOps ensures patient data is anonymized before training, maintains model versions for medical audits, and triggers retraining when new clinical guidelines or updated datasets become available.
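Pipelines commonly enforce this with a de-identification step before any record reaches training. The sketch below shows one piece of that, pseudonymization: it drops direct identifiers and replaces the patient ID with a keyed HMAC token so records for the same patient still link up. It is an illustration under stated assumptions, not a compliance control: the field list is hypothetical, and pseudonymized data generally still counts as personal data under GDPR, so true anonymization must also handle quasi-identifiers such as dates and postcodes.

```python
import hashlib
import hmac

# Direct identifiers to drop in this sketch; a real list comes from a privacy review.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record, key, id_field="patient_id"):
    """Drop direct identifiers and swap the patient ID for a keyed HMAC-SHA256 token."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    token = hmac.new(key, str(record[id_field]).encode(), hashlib.sha256).hexdigest()
    # Same patient + same key -> same token, so records still join for training,
    # but linking back to the real ID requires the secret key.
    out[id_field] = token[:16]
    return out


key = b"load-me-from-a-secrets-manager"   # placeholder; never hard-code a real key
record = {"patient_id": "MRN-0042", "name": "Jane Doe", "email": "jane@example.com",
          "age": 57, "diagnosis_code": "E11.9"}
safe = pseudonymize(record, key)
print(safe)
```

A keyed HMAC is used instead of a plain hash because medical record numbers come from a small, guessable space: without the key, an attacker cannot rebuild the mapping by hashing every possible ID.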

3) Government

Public agencies use models for benefits eligibility, tax compliance, and risk assessment. These decisions directly affect citizens, so fairness and explainability are non-negotiable. MLOps enforces bias checks as part of the deployment pipeline, prevents untested models from going live, and logs every change for public-record compliance.
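A bias check in the deployment pipeline can be as simple as comparing positive-decision rates across groups. This sketch uses the "four-fifths" ratio as its pass criterion. That is one heuristic among several fairness metrics (equalised odds, calibration, and others), and the right choice depends on the program and the applicable law. The group labels and decisions are illustrative.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive decisions (1 = approved) for each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_gate(predictions, groups, min_ratio=0.8):
    """Fail if any group's selection rate is below min_ratio times the highest
    group's rate (the 'four-fifths' heuristic). Returns (passed, ratios)."""
    rates = selection_rates(predictions, groups)
    best = max(rates.values())
    if best == 0:
        return True, {g: 1.0 for g in rates}   # nobody selected: no disparity in rates
    ratios = {g: r / best for g, r in rates.items()}
    return all(r >= min_ratio for r in ratios.values()), ratios


# Hypothetical validation-set decisions for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
passed, ratios = fairness_gate(preds, groups)
print(passed, ratios)   # False: group B is selected at a third of group A's rate
```

Run as a pipeline step, a failed gate blocks promotion the same way a failed accuracy test would, which is what "prevents untested models from going live" means in practice.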

The core value of MLOps in these environments is that compliance is built into the process, rather than being retrofitted after the fact. It turns AI into a system that can withstand legal scrutiny without slowing the pace of delivery.

From MLOps to ModelOps and LLMOps

The scope of operational AI is expanding. MLOps, once focused purely on machine learning models, is evolving into two broader disciplines.

ModelOps

Where MLOps handles the engineering side of deploying models, ModelOps extends to governance, business alignment, and lifecycle management for all analytical models: machine learning, rules-based, optimisation, and beyond. It connects technical deployment with business value tracking, so models stay aligned with the strategy they support.

LLMOps

The rapid adoption of large language models has created new operational requirements. LLMOps applies the same discipline as MLOps but focuses on managing prompts, fine-tuning, context retrieval, and guardrails that reduce hallucinations and misuse. In 2025, LLMOps is critical for organisations embedding generative AI into customer-facing products, internal knowledge systems, and decision support tools.
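Two LLMOps basics, versioned prompts and output guardrails, can be sketched without any model call. The example below assumes a retrieval setup where sources carry IDs like `[kb-12]`; `PromptVersion`, the template text, and the citation check are hypothetical. The guardrail is a crude heuristic that rejects answers citing nothing or citing sources that were never retrieved; it is not a hallucination detector.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str

    @property
    def digest(self):
        # Log this with every response so each output traces back to an exact prompt.
        return hashlib.sha256(self.template.encode()).hexdigest()[:12]

    def render(self, **kwargs):
        return self.template.format(**kwargs)


SUPPORT_PROMPT_V2 = PromptVersion(
    "support_answer", 2,
    "Answer using only the sources below and cite them as [id].\n"
    "Sources:\n{sources}\n\nQuestion: {question}",
)

CITATION = re.compile(r"\[([\w-]+)\]")


def grounding_guardrail(answer, retrieved_ids):
    """Reject answers with no citations or with citations to unretrieved sources."""
    cited = set(CITATION.findall(answer))
    if not cited:
        return False, "no citations"
    unknown = cited - retrieved_ids
    if unknown:
        return False, f"cites unretrieved sources: {sorted(unknown)}"
    return True, "ok"


prompt = SUPPORT_PROMPT_V2.render(
    sources="[kb-12] Refunds are processed within 5 business days.",
    question="How long do refunds take?")
ok, why = grounding_guardrail("Refunds take up to 5 business days [kb-12].", {"kb-12"})
bad, bad_why = grounding_guardrail("Refunds are instant [kb-99].", {"kb-12"})
print(SUPPORT_PROMPT_V2.version, SUPPORT_PROMPT_V2.digest, ok, bad, bad_why)
```

Treating prompts as versioned, hashed artifacts lets the same rollback and audit practices used for models apply to prompt changes.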

What This Shift Means for C-Suite Leaders

Enterprises will increasingly run MLOps, ModelOps, and LLMOps in parallel. The winning strategies will integrate these into a single operational framework, with shared governance, observability, and compliance layers. The goal is not to treat these as separate silos, but as parts of a unified model delivery system that covers every AI and analytics asset in the organisation.

MLOps Best Practices

MLOps best practices are crucial for turning AI prototypes into production-ready systems that deliver real business value. A robust MLOps pipeline aligns data science, engineering, and compliance at every stage of deployment.

By following proven machine learning best practices, enterprises ensure accuracy, scalability, and trust, whether they are scaling across cloud platforms or running deep learning workloads.

1) Link AI Initiatives to Measurable Business Outcomes

- Sub Focus: Commercial ROI, accountability, strategic alignment

- Why It Matters: AI must prove its value in terms that the business understands, such as revenue growth, cost savings, risk reduction, or customer retention. Models without clear KPIs are the first to be cut in budget cycles.

- ROI Impact: Organisations that align AI projects with business KPIs achieve 3x the ROI of those that do not.

- Example: A global insurer directly tied claims processing models to cost per claim reduction targets, achieving a 22% reduction in processing costs in the first year.

2) Compress Idea-to-Production Cycles to Under 90 Days

- Sub Focus: Speed to market, competitive advantage

- Why It Matters: A deployment delay can erode a first-mover advantage. Shorter delivery cycles allow rapid response to market changes and customer needs.

- ROI Impact: Fast-cycle MLOps can deliver 20–40% greater market capture in high-competition sectors.

- Example: A retail bank utilized automated CI/CT/CD pipelines to roll out a new credit risk model in 74 days, resulting in an 8% reduction in loan default rates within the first quarter.

3) Integrate Compliance Into the Model Lifecycle

- Sub Focus: Regulatory readiness, brand trust, risk avoidance

- Why It Matters: In industries such as healthcare, finance, and government, compliance failures can result in fines, loss of license, or reputational damage. Compliance should be embedded, not retrofitted.

- ROI Impact: Proactive compliance reduces audit preparation costs by up to 50% and shortens approval cycles by weeks.

- Example: A European healthcare provider embedded bias checks and explainability reports into their MLOps pipeline to comply with the EU AI Act, enabling faster rollout of diagnostic models across three countries.

4) Standardise for Enterprise Scale Deployment

- Sub Focus: Replication, cost efficiency, quality control

- Why It Matters: Without standardisation, every AI deployment is a bespoke project, increasing costs and slowing delivery.

- ROI Impact: Shared model registries, feature stores, and governance frameworks can reduce deployment costs by 25 to 30% and halve the time to scale.

- Example: A multinational telecom operator created a centralised MLOps platform, enabling models built for one market to be deployed in others with minimal changes, cutting replication time from six months to six weeks.

5) Monitor AI Health in Real Time

- Sub Focus: Operational transparency, risk control

- Why It Matters: Model drift, latency issues, and rising costs can go unnoticed until they impact customers or revenue. Real time monitoring makes issues visible before they escalate.

- ROI Impact: Identifying drift early can help large enterprises avoid financial losses that may amount to tens of millions of dollars annually.

- Example: An e-commerce leader integrated real time drift detection into its recommendation engines, protecting $50M in projected sales during a seasonal campaign.

6) Build for Continuity and Instant Recovery

- Sub Focus: Operational resilience, brand protection

- Why It Matters: AI outages are as damaging as core system failures. Customers expect consistency, and brand damage from failures can linger for years.

- ROI Impact: Automated rollback and retraining workflows reduce downtime-related losses by up to 80%.

- Example: A logistics provider used automated failover to switch to backup forecasting models during a data pipeline outage, avoiding service disruptions for 12,000 active shipments.
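Failover of the kind described in the logistics example can be sketched as a wrapper that serves the primary model and falls back to a simpler backup when the primary raises an error or returns an unusable value. The function names, the None/NaN validity check, and the seasonal-naive backup are assumptions for illustration.

```python
import logging

logger = logging.getLogger("forecasting")


def predict_with_fallback(features, primary, fallback,
                          is_valid=lambda y: y is not None and y == y):
    """Serve the primary model; if it raises or returns an invalid value
    (None or NaN by default), serve the backup instead. Returns
    (prediction, source) so monitoring can count how often fallback fires."""
    try:
        y = primary(features)
        if is_valid(y):
            return y, "primary"
        logger.warning("primary model returned %r; serving fallback", y)
    except Exception:
        logger.exception("primary model failed; serving fallback")
    return fallback(features), "fallback"


def primary_model(features):
    # Simulates the outage: the upstream feature pipeline is down.
    raise ConnectionError("feature pipeline unavailable")


def seasonal_naive(features):
    # Simple backup forecast: repeat the value from the same week last year.
    return features["same_week_last_year"]


result = predict_with_fallback({"same_week_last_year": 1200}, primary_model, seasonal_naive)
print(result)  # (1200, 'fallback')
```

The backup is deliberately simple so that it shares as few dependencies as possible with the primary model; a fallback that needs the same broken pipeline offers no protection.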

7) Treat Models as Living Products

- Sub Focus: Continuous improvement, adaptability

- Why It Matters: Models degrade as data and market conditions change. Continuous evolution maximises long term value.

- ROI Impact: Extending a model’s productive life by two to three years can save millions in redevelopment costs.

- Example: A fintech firm implemented quarterly model reviews and retraining triggers, keeping its fraud detection accuracy above 96% for three consecutive years.

Case Study: Building Scalable MLOps Pipelines for Financial Institutions

A leading financial institution partnered with Veritis to build scalable MLOps pipelines. The partnership enabled the institution to streamline the deployment of its machine learning models, improve collaboration, and scale efficiently across multiple teams and platforms.

Challenge: The institution struggled to manage and deploy machine learning models at scale, resulting in delayed decision making, inconsistent model performance, and challenges in maintaining regulatory compliance in the financial sector.

Solution: Veritis implemented end-to-end MLOps pipelines that automate model development, testing, and deployment. The resulting framework supports continuous model updates, better collaboration among data scientists, and faster time to market for financial services.

Results:

- Accelerated model deployment across multiple teams

- Improved model accuracy with automated performance monitoring

- Enhanced scalability to handle large volumes of financial data

This case highlights the significance of MLOps best practices in the financial industry, demonstrating how scalable pipelines can support the dynamic nature of financial services and ensure the continuous delivery of high quality models.

Conclusion

The true measure of artificial intelligence is not experimentation but sustained business performance. MLOps provides the structure, governance, and operational discipline required to maintain accuracy, ensure explainability, and respond quickly to changing conditions without compromising compliance or stability.

Veritis, a Stevie and Globee Business Awards winner, offers MLOps services to support your journey. With our expertise, your organization can navigate the complexities of MLOps with confidence and give its machine learning efforts the efficiency and speed required for success. As demand for MLOps solutions continues to rise, we remain committed to helping your organization realize the full potential of its machine learning investments.

For organisations seeking to transform AI from a series of initiatives into a long term competitive advantage, Veritis offers the expertise, infrastructure, and operational excellence to make it an enduring part of the business.