Until recently, most of us concentrated on the software development lifecycle (SDLC) and its phases, which typically include requirement elicitation, design, development, testing, deployment, and maintenance. The focus was on approaches to software development such as the waterfall, iterative, and agile models.

Today, nearly every organization is working to integrate AI/ML capabilities into its products. Building ML systems adds to or modifies parts of the traditional SDLC, and this has given rise to a distinct engineering discipline, along with a new buzzword and new job roles: MLOps, short for Machine Learning Operations. It is closely related to the broader field of ModelOps.

In 2023, most surveyed organizations are set to increase their investments in MLOps substantially. Specifically, 42% of respondents indicated that their organizations plan to increase spending by 11-25%, 37% by 26-50%, 16% by 51-75%, and 5% by 76-100%, while just 2% anticipate an increase of 10% or less. These figures reflect a significant upward trend in MLOps investment.

Moreover, an overwhelming 98% of respondents intend to raise their investments by at least 11%, with a substantial 58% looking to increase their expenditure by over 25%. These statistics underscore a solid commitment to MLOps and a profound belief in its potential to drive business growth.

Notably, these numbers significantly surpass Gartner’s projection of a 5.1% growth in overall IT-related spending for the year. This divergence underscores the increasing importance and value that organizations attribute to MLOps and its promising future potential.

MLOps is gradually developing into a self-sustained methodology for the entire machine learning lifecycle, encompassing every stage from data collection to governance and ongoing monitoring. As artificial intelligence becomes increasingly integrated into daily business operations, it is transitioning from being a purely innovative endeavor to a standard practice.

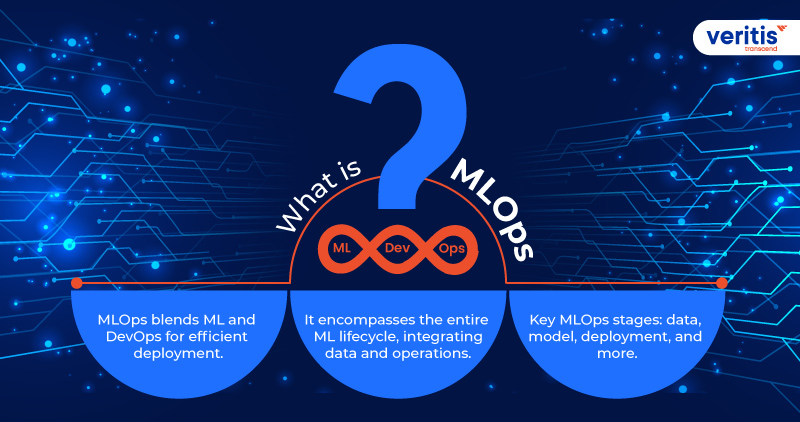

What is MLOps?

MLOps, a portmanteau of “machine learning” (ML) and “operations” (Ops), encompasses a range of management techniques tailored for the lifecycle of deep learning and production ML. It amalgamates ML and DevOps practices with data engineering procedures, all geared toward the efficient and dependable deployment of ML models in production environments and their subsequent maintenance. The core objective of MLOps is to facilitate effective management of the machine learning model lifecycle by promoting communication and cooperation between operations experts and data scientists.

MLOps, with its primary emphasis on the operational aspects of ML models, falls within the broader domain of ModelOps. ModelOps, in turn, covers the operationalization of AI models of all kinds, including but not limited to ML models.

MLOps focuses on raising the quality of production ML and increasing automation while keeping a close eye on compliance and business requirements. This balanced emphasis aligns closely with the principles of a DataOps or DevOps approach.

Although DataOps and DevOps are both mature sets of best practices, MLOps has evolved distinctively. It has broadened its scope to cover the entire ML lifecycle, including integration with model generation, continuous integration and delivery, deployment, orchestration, governance, health monitoring, diagnostic analysis, business metrics assessment, and the traditional software development lifecycle.

The result is a self-contained method for managing the ML lifecycle and an ML engineering culture that applies DevOps best practices to ML, merging development and operations into what we now call MLOps. A continuous drive for monitoring and automation across all stages of ML system development, including testing, integration, deployment, release, and infrastructure management, remains a core tenet of MLOps.

The essential stages of MLOps encompass:

- Data collection

- Data analysis

- Data preprocessing

- Model design and training

- Model verification

- Model deployment

- Model monitoring

- Model refinement and retraining
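To make these stages concrete, here is a minimal sketch that chains a few of them as plain Python functions. Everything in it is an illustrative assumption: the toy dataset, the one-parameter linear model, the function names, and the `max_error` threshold are hypothetical and stand in for real data sources, training code, and acceptance criteria.

```python
from statistics import mean


def collect():
    # Data collection: hypothetical (feature, label) pairs; None marks a missing value.
    return [(1.0, 2.1), (2.0, 3.9), (None, 5.0), (3.0, 6.2), (4.0, 7.8)]


def preprocess(rows):
    # Data preprocessing: drop rows with a missing feature.
    return [(x, y) for x, y in rows if x is not None]


def train(rows):
    # Model training: least-squares slope for the model y = w * x.
    sxy = sum(x * y for x, y in rows)
    sxx = sum(x * x for x, _ in rows)
    return sxy / sxx


def validate(weight, rows):
    # Model validation: mean absolute error (reuses the training rows for brevity;
    # a real pipeline would evaluate on held-out data).
    return mean(abs(y - weight * x) for x, y in rows)


def run_pipeline(max_error=0.5):
    rows = preprocess(collect())
    weight = train(rows)
    error = validate(weight, rows)
    # Deployment gate: only models under the error threshold are marked deployable.
    return {"weight": weight, "error": error, "deployable": error <= max_error}


result = run_pipeline()
print(result)
```

Monitoring and retraining would then wrap `run_pipeline` in a loop that reruns it whenever the deployed model stops meeting its targets.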


What is the Use of MLOps?

MLOps proves invaluable in developing and enhancing machine learning and AI solutions. This approach facilitates productive collaboration between data scientists and machine learning engineers, accelerating model development and deployment through continuous integration and deployment (CI/CD) practices. It also ensures that ML models are appropriately monitored, validated, and governed.

Why MLOps?

Managing models in a production environment can be demanding. To deliver the benefits of machine learning, models in production must improve the efficiency of business applications and contribute to informed decision-making. MLOps practices and technology are pivotal in enabling businesses to deploy, oversee, monitor, and govern their ML operations. Companies specializing in MLOps help organizations optimize model performance and speed up the automation of machine learning processes.

Data science practices are shifting significantly, with a growing emphasis on model management and operational functions that protect businesses from the adverse consequences of inaccurate model outcomes. Retraining models on updated datasets is becoming increasingly automated, and identifying model drift promptly and raising alerts when it becomes substantial are paramount.

Furthermore, maintaining the underlying technology of MLOps platforms and recognizing when a model needs to be upgraded are integral to keeping model performance high.
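One common way to quantify drift is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample and in recent data. The sketch below is a simplified, dependency-free version: the bin count, the epsilon used for empty bins, and the 0.2 alert threshold (a widely quoted rule of thumb) are assumptions rather than anything prescribed by this article.

```python
import math

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cut-off for "substantial" drift


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all baseline values identical; avoid division by zero

    def fractions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range fall into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each fraction at a small epsilon so the logarithm is defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected, actual, threshold=DRIFT_THRESHOLD):
    return psi(expected, actual) > threshold


baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]
print(drift_alert(baseline, baseline), drift_alert(baseline, shifted))
```

In practice the alert would feed a notification channel or a retraining trigger rather than a `print` call.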

This transformation doesn’t imply a shift in the responsibilities of data scientists. Instead, it signifies that the practices of machine learning operations are breaking down data silos and fostering a more expansive team approach.

Consequently, data scientists can concentrate on creating and deploying models and prioritize these tasks over making business decisions. Meanwhile, MLOps engineers manage the ML models that are already in production.

Why Do We Need MLOps?

Bringing machine learning into production is a challenging task. The machine learning lifecycle involves numerous intricate stages like data ingestion, data preparation, model training, fine-tuning, deployment, monitoring, explainability, and more. Additionally, it demands close collaboration and seamless handovers across various teams, from Data Engineering to Data Science to ML Engineering.

Maintaining operational excellence is crucial to ensure these processes run smoothly and harmoniously. MLOps encompasses the entire journey of experimenting, iterating, and continuously enhancing the machine learning lifecycle.


How to Implement MLOps

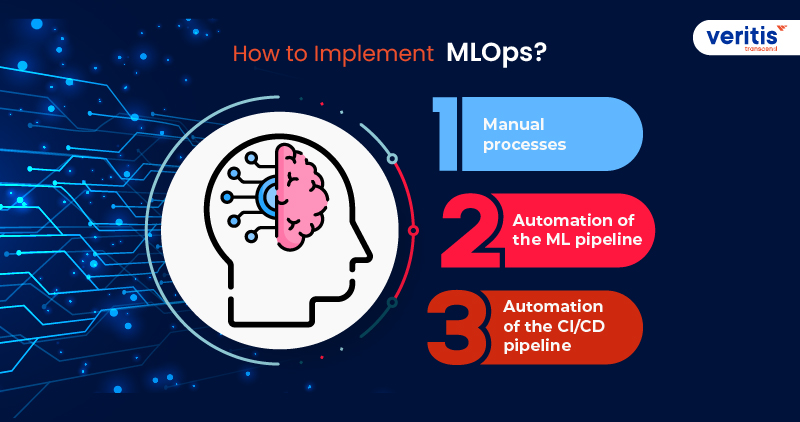

Google describes three levels of maturity for implementing MLOps:

- MLOps Level 0: Manual processes.

- MLOps Level 1: Automation of the ML pipeline.

- MLOps Level 2: Automation of the CI/CD pipeline.

1) MLOps Level 0

This scenario is common for organizations in the initial stages of adopting machine learning. A fully manual workflow driven primarily by data scientists can suffice when your models are rarely changed or retrained.

Features

- A Manual, Script-Dependent, and Interactive Procedure: Each phase is manual, including data analysis, preprocessing, model training, and validation. Every step must be executed by hand, as must the transition from one phase to the next.

- Disconnection Between ML and Operational Aspects: The workflow separates the data scientists who create the model from the engineers who serve it as a prediction service. Data scientists hand the engineering team a trained model as a deployable component for their API infrastructure.

- Sparse Release Iterations: The premise is that your data science team oversees a small number of models that undergo infrequent changes, either in model implementation or retraining with fresh data. A new model version is rolled out only a few times annually.

- Absence of Continuous Integration (CI): Due to the assumption of minimal implementation changes, Continuous Integration is overlooked. Typically, code testing is integrated into the notebooks or script execution process.

- Lack of Continuous Deployment (CD): Continuous Deployment is not considered because new model versions are deployed infrequently. Deployment refers only to the prediction service, a microservice with a REST API.

- Lack of Continuous Performance Monitoring: The process does not track or log model predictions and actions. The engineering team may, however, have its own intricate setup for configuring, testing, and deploying the API, including security, regression, load, and canary testing.
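The gap described in the last bullet can be narrowed with very little code. The following sketch wraps any prediction function so that each call is recorded as a JSON line. The `logged` helper, the in-memory `sink` list, and the toy model are hypothetical; a real service would write to a log pipeline or database instead.

```python
import json
import time


def logged(predict, sink):
    """Wrap a predict function so every call is recorded in `sink`."""

    def wrapper(features):
        prediction = predict(features)
        sink.append(
            json.dumps(
                {"ts": time.time(), "features": features, "prediction": prediction}
            )
        )
        return prediction

    return wrapper


# Hypothetical stand-in for a trained model: flags inputs whose sum exceeds 1.0.
def toy_model(features):
    return sum(features) > 1.0


prediction_log = []
model = logged(toy_model, prediction_log)
print(model([0.7, 0.6]), len(prediction_log))
```

Once predictions are recorded, they can later be joined with actual outcomes to measure how the model performs over time.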

Challenges

In practical situations, deployed models frequently encounter issues when operating in real-world scenarios. These models struggle to adjust to shifts in environmental dynamics or alterations in the data characterizing the environment. Forbes features an insightful article on this topic, exploring the reasons behind the crash and burn of machine learning models in production.

Adopting MLOps practices for CI/CD and Continuous Training (CT) helps tackle the issues associated with this manual workflow. Deploying an ML training pipeline enables Continuous Training, while a CI/CD system allows new implementations of the ML pipeline to be tested, built, and deployed rapidly.


2) MLOps Level 1

The primary objective of MLOps level 1 is to achieve continuous training (CT) of the model by automating the ML pipeline. This results in continuous delivery of the model prediction service.

This situation can prove advantageous for solutions operating within dynamic environments, where the need to preemptively respond to alterations in customer behavior, pricing structures, and other indicators is essential.

Features

1) Swift Experimentation: The steps of an ML experiment are orchestrated and executed automatically.

2) Continuous Training of the Model in Production: The model is trained automatically in production on fresh data, triggered by live pipeline events.

3) Symmetry Between Experimentation and Operation: The pipeline implementation utilized in the development or experimental environment is also employed in the preproduction and production environments, serving as a fundamental component of MLOps practices that align with DevOps principles.

4) Modularized Coding for Components and Pipelines: Modular code is essential for building ML pipelines. Components should be reusable, composable, and potentially shareable across different ML pipelines, which is often achieved by packaging them in containers.

5) Continuous Model Delivery: Automation is applied to deploying the model, enabling it to serve as a prediction service for online predictions once it’s trained and validated.

6) Pipeline Deployment: In MLOps level 0, you deploy a trained model as a prediction service in the production environment. At level 1, the deployment involves the entire training pipeline, which operates automatically and periodically to deliver the trained model as the prediction service.
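The ideas of modular components and of running the same pipeline in every environment can be sketched in a few lines. In this hypothetical example, each step is a plain function that takes and returns a context dictionary, and a single pipeline definition is run against both a development input and a production input. The step names, the `env` key, and the data are illustrative assumptions.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


def scale(ctx: Dict) -> Dict:
    # Reusable component: scale values relative to the largest one.
    top = max(ctx["values"])
    return {**ctx, "values": [v / top for v in ctx["values"]]}


def summarize(ctx: Dict) -> Dict:
    # Reusable component: attach the mean of the current values.
    return {**ctx, "mean": sum(ctx["values"]) / len(ctx["values"])}


def run(steps: List[Step], ctx: Dict) -> Dict:
    # The orchestrator simply threads the context through each step in order.
    for step in steps:
        ctx = step(ctx)
    return ctx


# One pipeline definition, used unchanged in both environments.
PIPELINE: List[Step] = [scale, summarize]

dev = run(PIPELINE, {"env": "dev", "values": [1.0, 2.0, 4.0]})
prod = run(PIPELINE, {"env": "prod", "values": [10.0, 20.0, 40.0]})
print(dev["mean"], prod["mean"])
```

Because the steps share one small interface, they can be reordered, reused in other pipelines, or packaged separately without changing the orchestrator.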


Additional Components

Data and Model Validation

Data and model validation are integral components of the pipeline. This process relies on incoming, real-time data to generate a fresh model version trained on this new data. Consequently, automated data validation and model validation steps are indispensable within the production pipeline.
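As a rough illustration, the two checks might look like the sketch below. The schema format (expected type plus an allowed range per column), the function names, and the `min_gain` parameter are assumptions made for this example, not part of any specific validation library.

```python
def validate_data(rows, schema):
    """Return a list of problems; an empty list means the batch passes."""
    problems = []
    for i, row in enumerate(rows):
        for column, (kind, lo, hi) in schema.items():
            value = row.get(column)
            if not isinstance(value, kind):
                problems.append(f"row {i}: {column} missing or wrong type")
            elif not lo <= value <= hi:
                problems.append(f"row {i}: {column}={value} outside [{lo}, {hi}]")
    return problems


def validate_model(candidate_score, production_score, min_gain=0.0):
    """Accept the new model only if it is at least as good as the current one."""
    return candidate_score >= production_score + min_gain


# Hypothetical schema: column name -> (accepted types, minimum, maximum).
SCHEMA = {"age": ((int, float), 0, 120)}

batch = [{"age": 30}, {"age": 150}, {}]
print(validate_data(batch, SCHEMA))
print(validate_model(candidate_score=0.91, production_score=0.90))
```

A pipeline would typically stop before training if `validate_data` reports problems, and skip deployment if `validate_model` returns `False`.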

Feature Repository

A feature repository is a centralized location for standardizing the definition, storage, and access of features used in training and serving contexts.
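The core idea, one shared definition per feature that is used for both training and serving, can be sketched with a small in-memory class. This `FeatureStore` class and its method names are hypothetical and far simpler than a production feature store, which would add persistence, versioning, and point-in-time lookups.

```python
class FeatureStore:
    def __init__(self):
        self._definitions = {}  # feature name -> function of a raw record
        self._values = {}       # (entity id, feature name) -> computed value

    def register(self, name, fn):
        # Standardize the definition: one function per feature name.
        self._definitions[name] = fn

    def ingest(self, entity_id, raw):
        # Compute and store every registered feature for this entity.
        for name, fn in self._definitions.items():
            self._values[(entity_id, name)] = fn(raw)

    def get_vector(self, entity_id, names):
        # Training and serving both read features through this one method.
        return [self._values[(entity_id, name)] for name in names]


store = FeatureStore()
store.register("order_total", lambda raw: sum(raw["orders"]))
store.register("order_count", lambda raw: len(raw["orders"]))
store.ingest("user-1", {"orders": [10.0, 5.0]})
print(store.get_vector("user-1", ["order_total", "order_count"]))
```

Reading features through a single access path is what keeps training and serving from drifting apart in how they compute the same feature.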

Metadata Management

Records detailing every instance of the ML pipeline’s execution are maintained to support data and artifact lineage, reproducibility, comparisons, and error and anomaly debugging.
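A minimal version of such a record could look like the sketch below. The field names, the in-memory `run_log` list, and the choice of a SHA-256 hash of the training rows as a data fingerprint are assumptions made for illustration.

```python
import hashlib
import json
import time


def record_run(log, params, data_rows, metrics):
    """Append one pipeline-run record to `log` and return it."""
    # Fingerprint the data so two runs can be checked for identical inputs.
    payload = json.dumps(data_rows, sort_keys=True).encode("utf-8")
    entry = {
        "run_id": len(log) + 1,
        "started_at": time.time(),
        "params": params,
        "data_sha256": hashlib.sha256(payload).hexdigest(),
        "metrics": metrics,
    }
    log.append(entry)
    return entry


run_log = []
rows = [{"x": 1, "y": 2}, {"x": 2, "y": 4}]
first = record_run(run_log, {"learning_rate": 0.1}, rows, {"mae": 0.14})
second = record_run(run_log, {"learning_rate": 0.05}, rows, {"mae": 0.11})
print(first["data_sha256"] == second["data_sha256"])
```

With the parameters, data fingerprint, and metrics stored side by side, two runs can be compared and a surprising result traced back to what changed.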

ML Pipeline Activation

The automation of ML production pipelines allows for the retraining of models with fresh data based on specific criteria that align with your use case. These criteria may include:

- Triggered on request

- Scheduled intervals

- Availability of new training data

- Degradation in model performance

- Substantial shifts in data distribution (evolving data profiles)
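These criteria translate naturally into a single decision function. The sketch below returns the list of reasons that currently call for retraining. The `PipelineState` fields and all default thresholds are hypothetical and would need tuning for a real use case.

```python
from dataclasses import dataclass


@dataclass
class PipelineState:
    requested: bool = False            # someone asked for a retrain
    hours_since_last_run: float = 0.0
    new_rows: int = 0                  # training rows that arrived since last run
    accuracy: float = 1.0              # latest measured model accuracy
    drift_score: float = 0.0           # e.g. a distribution-shift metric


def retrain_reasons(state, *, max_hours=24.0, min_new_rows=1000,
                    min_accuracy=0.9, max_drift=0.2):
    """Return the names of all triggers that fire for the given state."""
    checks = {
        "on request": state.requested,
        "schedule": state.hours_since_last_run >= max_hours,
        "new data": state.new_rows >= min_new_rows,
        "performance degradation": state.accuracy < min_accuracy,
        "data drift": state.drift_score > max_drift,
    }
    return [name for name, fired in checks.items() if fired]


print(retrain_reasons(PipelineState(new_rows=5000, accuracy=0.8)))
```

Returning the reasons rather than a bare boolean makes it easy to log why a given retraining run was started.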

Challenges

This configuration works well when you deploy new models trained on fresh data, rather than models built on entirely new ML concepts.

Nonetheless, when you want to experiment with novel ML concepts and swiftly roll out new iterations of ML components, you must establish a CI/CD infrastructure. This CI/CD setup automates constructing, testing, and deploying ML pipelines, which is especially valuable when handling numerous ML pipelines in a production environment.

3) MLOps Level 2

Implementing a robust automated CI/CD system is imperative to ensure swift and dependable updates of production pipelines. With this automatic CI/CD system, your data scientists can efficiently experiment with new ideas related to feature engineering, model architecture, and hyperparameters.

This level of MLOps adoption is tailored for technology-driven companies that must retrain their models daily, if not hourly, update them within minutes, and redeploy them across thousands of servers simultaneously. Surviving without a comprehensive end-to-end MLOps framework would be challenging for such organizations.

This MLOps setup comprises the following key components:

- Source control

- Testing and building services

- Deployment services

- Model registry

- Feature store

- ML metadata repository

- ML pipeline orchestrator
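Of these components, the model registry is perhaps the easiest to picture in code. The sketch below keeps a list of model versions and promotes a version to production only when it beats the current one. The `ModelRegistry` class, its methods, and the "higher metric is better" rule are assumptions for illustration, not the API of any particular registry product.

```python
class ModelRegistry:
    def __init__(self):
        self._versions = []      # one dict per registered model version
        self.production = None   # version number currently serving, if any

    def register(self, artifact, metric):
        """Store a trained model reference and return its version number."""
        version = len(self._versions) + 1
        self._versions.append(
            {"version": version, "artifact": artifact, "metric": metric}
        )
        return version

    def promote_if_better(self, version):
        """Promote `version` to production if it beats the current model."""
        candidate = self._versions[version - 1]
        current = (
            self._versions[self.production - 1] if self.production else None
        )
        if current is None or candidate["metric"] > current["metric"]:
            self.production = version
            return True
        return False


registry = ModelRegistry()
v1 = registry.register("model-v1.bin", metric=0.80)
registry.promote_if_better(v1)
v2 = registry.register("model-v2.bin", metric=0.78)
registry.promote_if_better(v2)
print(registry.production)
```

In a full setup, the automated pipeline would call `register` after each training run, and the model delivery stage would read `production` to decide which version to serve.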


Features

1) Development and Experimentation: Development and experimentation involve an iterative process of experimenting with new ML algorithms and modeling approaches, with orchestrated experiment steps. The output of this stage is the source code for the individual steps of the ML pipeline, which is subsequently uploaded to a source repository.

2) Pipeline Continuous Integration: Pipeline continuous integration encompasses building source code and executing various tests. The results of this phase include pipeline components such as packages, executables, and artifacts, which are slated for deployment in subsequent stages.

3) Pipeline Continuous Delivery: In the continuous delivery phase of the pipeline, the artifacts generated in the CI stage are deployed to the intended environment. The outcome of this stage is the deployment of a pipeline featuring the new model implementation.

4) Automated Triggering: Automated activation involves the automatic execution of the pipeline in a production environment, either following a predefined schedule or in response to a trigger. This stage generates a freshly trained model that is then pushed to the model registry.

5) Model Continuous Delivery: In the continuous delivery of models, the trained model is made accessible as a prediction service for generating predictions. The outcome of this stage is the deployment of a model prediction service.

6) Monitoring: Monitoring involves gathering performance statistics for the model using real-time data. The result of this stage is a trigger that initiates the execution of the pipeline or the commencement of a new cycle of experimentation.

Data analysis remains a manual procedure carried out by data scientists before the pipeline initiates a new experimental iteration. Likewise, the analysis of the model is also conducted manually.

Challenges

MLOps level 2 offers agility but brings challenges like resource strain due to frequent retraining, the need for precise coordination between development and production, and the complexity of managing feature stores and metadata. Maintaining the demanding pace while ensuring data integrity is vital.

Addressing continuous experimentation and development challenges entails automating data analysis and model assessment for optimal MLOps level 2 utilization. Ensuring dependable automated triggering and monitoring mechanisms is crucial for model stability. Striking the appropriate balance between innovation and resource management is vital for tech companies aiming to excel in dynamic environments.

Conclusion

MLOps serves as a valuable compass, guiding individuals, small groups, and even companies as they strive to reach their objectives. It is a versatile tool that can accommodate various constraints, whether limited data, modest infrastructure, or a tight budget.

The beauty of MLOps is its flexibility: you decide how much of it to adopt. Experiment with different configurations and keep what best suits your needs. Veritis, winner of the Stevie and Globee Business Awards, offers MLOps services to support you on this journey.
