Technological advances have raised firms’ production capabilities to new heights across almost every industry.

Gone are the days when every task was human-intensive!

Today, the world runs on technology-driven systems that ease industry processes, from developing a product to releasing it to the market and on to offering end users a memorable experience.

DevOps is one of the most talked-about approaches in today’s tech world, valued for better collaboration among teams and faster delivery with fewer failures and quicker recovery.

For the IT industry, DevOps emerged as a way to gain capabilities such as continuous integration, continuous delivery and a faster rate of innovation, all of which speed up the software process chain. And that’s not the end!

Two of today’s most advanced technologies are being adopted across nearly every technology and industry to scale up performance and productivity. While some leading market players already run on them, many small and medium-sized firms are still racing to adopt them.

They are undoubtedly Artificial Intelligence (AI) and Machine Learning (ML)!

It’s no surprise that any AI-powered, ML-capable system is held in high regard in today’s smart world!

Static tools for deployment, provisioning and Application Performance Management (APM) have already reached their full potential and are, in fact, being outpaced by ever-growing industry demands.

The next quest has already begun: smarter management tools that apply intelligence to simplify the work of development and testing engineers. This is where AI and ML play vital roles!

In this article, we will see how AI and ML integrations can power DevOps. In brief, AI and ML help DevOps by automating routine and repeatable tasks, improving efficiency and minimizing the time teams spend on a process. Let’s look at the details!

Applying Artificial Intelligence (AI) to DevOps

The data revolution is one key factor posing serious challenges to the DevOps environment.

Scanning through huge volumes of data to find a critical issue as part of day-to-day computing operations is time-consuming and human-intensive.

That’s where Artificial Intelligence comes in: it can compute, analyze and make an immediate decision that might take a human hours to reach.

With the evolution of DevOps, two different teams (development and operations) started collaborating on a single platform, which calls for effective tools that reduce errors and the need to revisit the same problem.

AI can transform the DevOps environment in various ways such as:

- Data Accessibility: AI can widen data access for teams that typically struggle with a lack of freely available data. It enhances their ability to reach huge volumes of online data beyond organizational limits for big data aggregation, and it helps them obtain well-organized data drawn from widely available datasets for consistent and repeatable analysis.

- Self-governed Systems: Adapting to change is a key limitation many firms face, owing to analytics that stay confined within certain boundaries. AI has changed this by shifting analysis from human-driven to self-governed. Self-governed tools can now drive many operations that humans could not handle as easily.

- Resource Management: By widening the scope for automated environments that handle many routine and repeatable tasks, AI has transformed resource management, opening more avenues for innovation and new strategies.

- Application Development: AI’s ability to automate many of the business processes and empower data analytics is likely to have a bigger impact on the DevOps environment. Many firms have already begun adopting AI and Machine Learning for achieving efficiency in application development.

AI can help your teams pinpoint the solution to a problem within a dataset instead of spending hours sifting through huge data volumes. This not only saves time but also reduces the amount of work required by almost half.
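To make the idea of “finding the one critical entry in a huge volume of data” concrete, here is a minimal sketch in Python. It is not a trained model: it only masks the variable parts of each log line, counts how often each resulting template appears, and surfaces the rare ones. The function names, the masking rules and the sample log lines are all assumptions made up for illustration.

```python
import re
from collections import Counter

def template_of(line: str) -> str:
    """Mask variable parts (hex ids, numbers) so similar lines share one template."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)
    line = re.sub(r"\d+", "<NUM>", line)
    return line.strip()

def rare_lines(lines, max_count=1):
    """Return the lines whose template occurs at most max_count times."""
    counts = Counter(template_of(line) for line in lines)
    return [line for line in lines if counts[template_of(line)] <= max_count]

# Hypothetical log excerpt: four routine health checks and one crash.
logs = [
    "GET /health 200 in 3ms",
    "GET /health 200 in 4ms",
    "GET /health 200 in 2ms",
    "worker 17 crashed: out of memory at 0x7ffe12",
    "GET /health 200 in 5ms",
]

print(rare_lines(logs))
# ['worker 17 crashed: out of memory at 0x7ffe12']
```

A real AI-assisted tool would learn what “normal” looks like rather than rely on fixed masking rules, but the principle is the same: the machine reads everything so that a human only has to read what stands out.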

Applying Machine Learning (ML) to DevOps

Machine Learning is the application of AI through programs or algorithms that let machines learn from data sets.

Getting systems used to learning automatically is the mark of an effective ML implementation, which in practice means building a culture around the ‘practice of continuous learning’.

This makes it easier for teams to deal with complex aspects such as linear patterns, massive datasets, query refinement and the continuous discovery of new insights, all at the speed of the platform they run on.

As part of the process chain, ML makes bugs easier to fix and helps teams make frequent changes to the overall code without hassle.

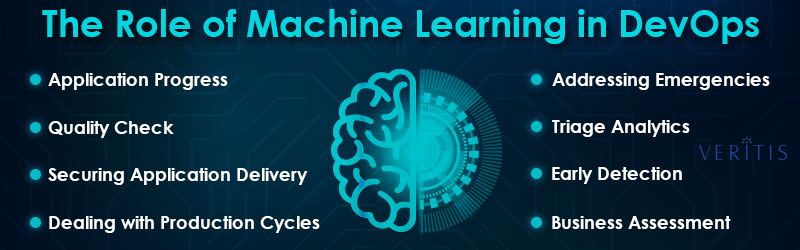

The following are key areas where ML integration matters for DevOps:

- Application Progress: While DevOps tools such as Git and Ansible provide visibility into the delivery process, applying ML to their data helps address irregularities such as unusual code volumes, long build times, delayed code check-ins, slow release rates, improper resourcing and process slowdowns.

- Quality Check: By analyzing testing outputs in detail, ML reviews quality assessment results efficiently and builds a library of test patterns from what it discovers. This keeps testing comprehensive for every release, improving the quality of the applications delivered.

- Securing Application Delivery: Securing application delivery is one key advantage that ML integration offers to DevOps. With ML in place, it is easier to learn normal user behavior patterns and so catch anomalies in the delivery chain. Such anomalies may show up as unusual access to key areas such as system provisioning, automation routines, repositories, deployment activity and test execution. Theft of intellectual property and the inclusion of unauthorized code in the process chain are among the most common bad patterns.

- Dealing with Production Cycles: DevOps teams typically use ML to analyze resource utilization and other patterns in order to detect abnormal behavior such as memory leaks. Because ML builds a better understanding of an application in production, it is well suited to managing production issues.

- Addressing Emergencies: ML plays a key role here because of its ability to analyze machine-generated data. It copes well with the production chain, especially with sudden alerts: by continuously training systems to recognize repeating patterns and inadequate warnings, it filters the flood of alerts down to the ones that matter.

- Triage Analytics: ML has its own way of dealing with analytics: it can easily prioritize known issues and, often, some unknown ones as well. ML tools can help you identify issues in general processing and also manage release logs so that they can be correlated with new deployments.

- Early Detection: ML tools give Ops teams the ability to detect an issue at an early stage and respond quickly, which supports business continuity. They can build patterns that analyze and predict user behavior, for example by analyzing configuration against expected performance levels, response rates and success duration for a newly introduced feature, and they keep a continuous check on factors that can affect customer engagement.

- Business Assessment: Beyond supporting process development, ML also plays a key role in ensuring business continuity for an organization. While DevOps places great importance on understanding how code releases serve business goals, ML tools support this with pattern-based analysis of user metrics, alerting the relevant business teams and developers when an issue arises.
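As a small illustration of the “Dealing with Production Cycles” point, the sketch below flags a possible memory leak by fitting a straight line to memory samples and checking its slope. This is a plain least-squares trend test standing in for a trained model, and the function names, the threshold of 1 MB per interval and the sample readings are all assumptions chosen for the example.

```python
from statistics import mean

def slope(samples):
    """Least-squares slope of the samples against their index (units per interval)."""
    xs = range(len(samples))
    x_bar, y_bar = mean(xs), mean(samples)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

def looks_like_leak(memory_mb, min_slope_mb=1.0):
    """Flag a steady upward trend; min_slope_mb is an assumed threshold."""
    return len(memory_mb) >= 2 and slope(memory_mb) >= min_slope_mb

# Hypothetical memory readings in MB, taken at regular intervals.
steady = [512, 515, 510, 514, 511, 513]
leaking = [512, 520, 531, 540, 552, 561]

print(looks_like_leak(steady))   # False
print(looks_like_leak(leaking))  # True
```

In practice an ML-based monitor would learn the threshold from historical data and account for daily or weekly usage cycles, but the output is the same kind of signal: an early warning before the process actually runs out of memory.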

Overall, Machine Learning (ML) can help DevOps in:

- IT Operations Analytics (ITOA)

- Predictive Analytics (PA)

- Artificial Intelligence (AI)

- Algorithmic IT Operations (AIOps)

Now that we know the advantages AI and ML offer your DevOps environment, the next thing to look at is the steps to implement ‘AI and ML in DevOps’.

Here are seven steps to consider in making your DevOps environment AI/ML-driven:

- Adopting Advanced APIs: Have development teams gain hands-on experience with the ready-made AI/ML APIs offered by platforms such as Azure, AWS and GCP, which let them deploy robust AI/ML capabilities into their software without building their own models. From there, they can focus on integrating add-ons such as voice-to-text and other advanced patterns.

- Identifying Related Models: The next step is to identify similar AI/ML APIs. With successful ML/AI model deployments in place, development becomes easier, and individual teams can work on further enhancements and apply them to additional use cases.

- Parallel Pipeline: Because AI and ML are still at the experimentation stage, it is important to consider running parallel pipelines so that a failure or sudden halt does not derail things. A better approach is to add ML/AI capabilities step by step, in line with the project’s progress, to avoid significant delays.

- Pre-trained Model: A well-documented, pre-trained model can drastically lower the barrier to adopting ML and AI capabilities. A pre-trained model can help recognize user behavior or inputs in a specific search. If it matches at least the basic aspects of the user’s search pattern, further add-ons can bring its results fully in line with user behavior. So, having a pre-trained model is key to AI/ML adoption in the initial phase.

- Public Data: Finding the initial training data is a key challenge in adopting AI/ML. No one will hand you this data, so where will you draw it from? That’s where public data sets come in. They may not meet all your requirements, but they can at least fill the gaps and improve project viability.

- Due Identity: The true potential of ML/AI becomes visible only once the software runs and delivers better speed, quality and performance than the traditional approach. So, it is important for any organization to identify the success stories that come out of AI/ML adoption and share them with other teams to keep them informed.

- Broaden Horizons: Developers should ideally keep learning new things and staying up to date, and this applies even more to AI/ML use cases. Organizations should encourage this by giving teams easy access to ML/AI sandboxes and general-purpose APIs, without the extra formalities of the corporate procurement process.
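The “Parallel Pipeline” step above can be sketched as a shadow run: the existing, trusted check still makes the decision, while the experimental ML check runs alongside it and only reports whether it agreed. Everything here is hypothetical: `rule_based_gate`, `ml_gate` (a stand-in for a real model call) and the build fields are invented for illustration, and the two checks run one after the other rather than concurrently.

```python
def rule_based_gate(build):
    """Existing, trusted check: hold the release if any test failed."""
    return "hold" if build["failed_tests"] > 0 else "deploy"

def ml_gate(build):
    """Hypothetical stand-in for a model call; it also weighs build time."""
    if "build_minutes" not in build:
        raise ValueError("missing feature: build_minutes")
    risky = build["failed_tests"] > 0 or build["build_minutes"] > 30
    return "hold" if risky else "deploy"

def run_in_shadow(primary, candidate, payload):
    """Return primary's decision plus whether candidate agreed (None if it failed)."""
    decision = primary(payload)
    try:
        agreed = candidate(payload) == decision
    except Exception:
        agreed = None  # the experimental step failed; the decision is unaffected
    return decision, agreed

print(run_in_shadow(rule_based_gate, ml_gate, {"failed_tests": 0, "build_minutes": 12}))
# ('deploy', True)
print(run_in_shadow(rule_based_gate, ml_gate, {"failed_tests": 0, "build_minutes": 45}))
# ('deploy', False)
print(run_in_shadow(rule_based_gate, ml_gate, {"failed_tests": 2}))
# ('hold', None)
```

Logging the disagreements over a few weeks gives teams evidence of how the ML step behaves before it is trusted to make decisions on its own, which fits the step-by-step adoption the list recommends.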

In Conclusion

Overall, AI/ML has arrived to bridge the long-standing gap between humans and huge volumes of data, otherwise known as Big Data.

Isn’t it useful to have a tool that gives you a consolidated solution derived from similar, widely available scenarios on the web, instead of disturbing your entire software environment and applications over a single entry buried in heaps of log data?

AI and ML help people keep pace with the growing scope and velocity of data by giving them operational intelligence that can handle it, and that is what you may need in your DevOps environment.

So, the solution is to have a system that can mimic user behavior, be it searching, monitoring, troubleshooting or interacting with data, instead of manually analyzing data that exists in immeasurable volumes.

Go ahead! It’s time to make your DevOps environment AI-powered and ML-driven!