The ultimate goal of DevOps is to promote strong collaboration between lines of business, development, operations, and IT frameworks. Although the DevOps movement has gained a massive footprint across IT organizations, significant changes continue to emerge in the field, making it hard to keep up with the trend.

There is a constant influx of new ideas for improving software delivery: making it faster, more efficient, and more quality-focused.

New disciplines (such as software delivery management), tools, and protocols appear regularly, making it harder for organizations to adopt enterprise-level DevOps successfully. Because tactics and challenges change at every stage of the DevOps journey, even the most promising efforts can fail to reach full DevOps adoption.

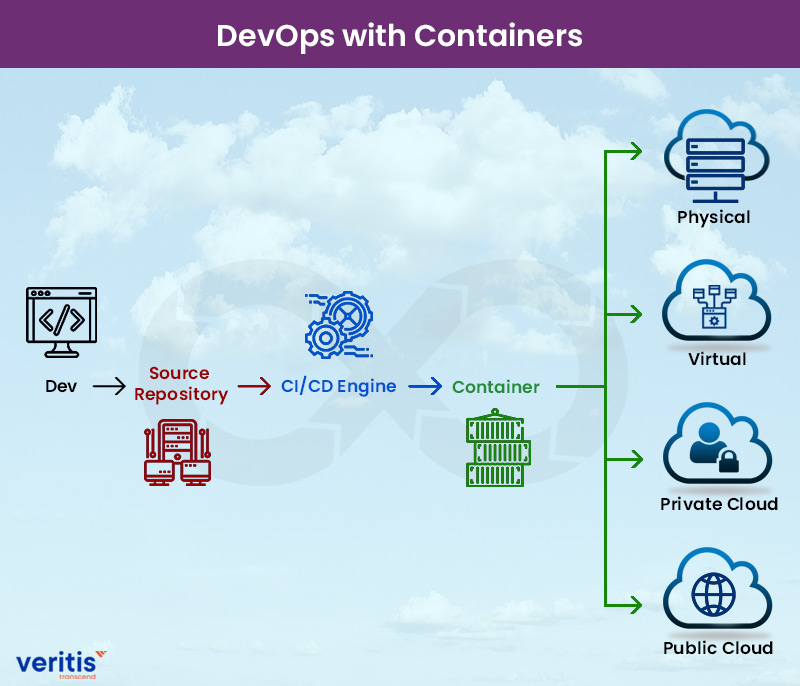

To realize business value through DevOps, many organizations have turned to containers to simplify their build, test, and deploy pipelines.

Containerization for DevOps

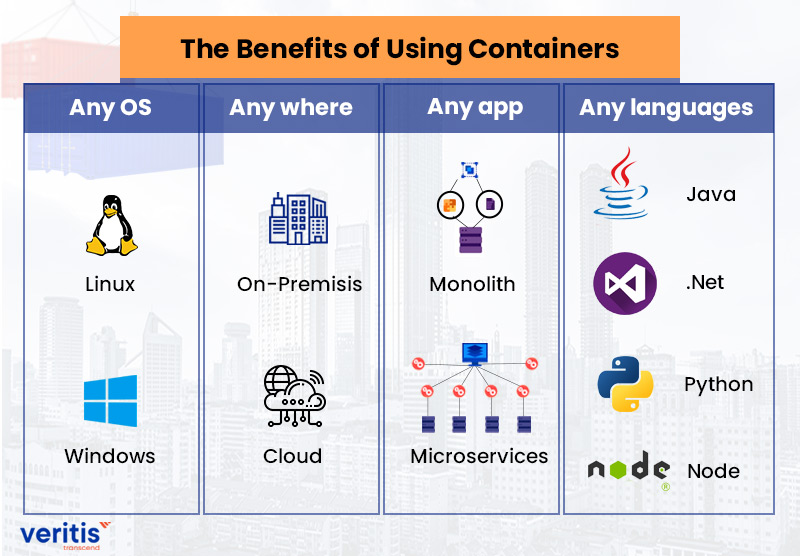

Containers are lightweight, portable units of software that package application code together with the libraries, dependencies, and operating-system files it needs. Because containers share the host's kernel rather than bundling a full OS, the same software can run anywhere a compatible container runtime is available (on a desktop, a laptop, traditional IT, or the cloud) without reconfiguration.

With containers, developers can easily share their software and dependencies with IT operations while eliminating the typical application conflicts between environments. Developers own the container's content, application, interdependencies, and components, while the operations team manages production environments and infrastructure to ensure deployed applications are delivered appropriately. In this way, containers indirectly bring developers and IT operations closer together, promoting effective collaboration.

How Can Containers Accelerate Your DevOps Journey?

By simplifying the process of designing, testing, and deploying software from a developer's computer to various production environments, containers benefit DevOps in the following ways:

- Improved flexibility

- Improved operational consistency

- Faster production deployments

- Enhanced development pipeline

- Optimal resource utilization

- Platform independence

- Greater scalability

- Greater modularity and security

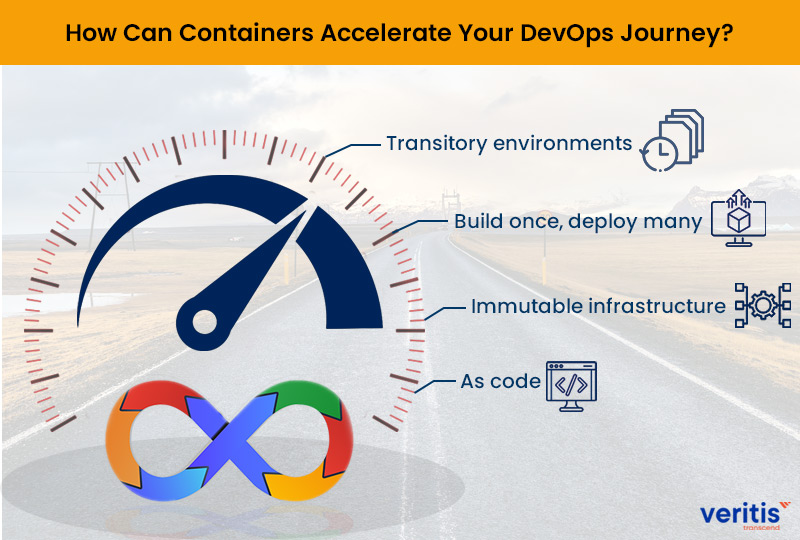

When combined with DevOps practices, containers help organizations advance their DevOps journey in the following ways:

1) Transitory environments

Containers are well suited to ephemeral (transitory) environments because they are lightweight, portable, quick to deploy, and require fewer resources. A container can be spun up, used for a specific activity such as continuous testing, and discarded once the activity completes. This cycle can be repeated each time a new environment is needed.
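The spin-up, use, and discard cycle maps naturally onto a Python context manager. The sketch below is a minimal illustration, not a prescribed tool: it assumes the Docker CLI is installed and that the image (the hypothetical `myapp-tests:latest`) provides a `sleep` binary and your test runner. The function names are illustrative and not part of any library.

```python
import subprocess
import uuid
from contextlib import contextmanager
from typing import Callable, Iterator, List

# A runner executes one CLI command. It is injectable so the lifecycle
# can be exercised without a Docker daemon.
Runner = Callable[[List[str]], None]


def docker_runner(cmd: List[str]) -> None:
    """Run a docker CLI command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)


@contextmanager
def ephemeral_environment(image: str, runner: Runner = docker_runner) -> Iterator[str]:
    """Spin up a throwaway container, yield its name, and always remove it."""
    name = f"ephemeral-{uuid.uuid4().hex[:8]}"
    # -d runs the container in the background; "sleep infinity" keeps it
    # alive while activities run (assumes the image provides sleep).
    runner(["docker", "run", "-d", "--name", name, image, "sleep", "infinity"])
    try:
        yield name
    finally:
        # rm -f stops and deletes the container, even if an activity failed.
        runner(["docker", "rm", "-f", name])


def run_activity(name: str, command: List[str], runner: Runner = docker_runner) -> None:
    """Execute one activity, such as a test suite, inside the container."""
    runner(["docker", "exec", name, *command])


# Usage (hypothetical image name):
# with ephemeral_environment("myapp-tests:latest") as env:
#     run_activity(env, ["pytest", "-q"])
```

Because teardown sits in a `finally` clause, the environment is recycled whether the activity succeeds or fails, so every run starts from a clean container.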

2) Build once, deploy many

Rather than compiling and rebuilding your application for every environment you plan to deploy to, DevOps encourages organizations to build the application binary only once and promote that same binary from Integration to Test and then to Production. This reduces the deployment-time variables that could lead to errors.

Containers reinforce the “build once, deploy many” process through the image: an immutable artifact that a container engine uses to create new containers.
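The sketch below models “build once, deploy many” in plain Python. It is a deliberate simplification: the digest here is a SHA-256 of the artifact bytes, whereas a real registry computes image digests over the image manifest, and `registry.example.com/shop` is a placeholder. What it illustrates is the principle: the artifact's identity is fixed at build time, and every environment receives the identical reference while only runtime configuration varies.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List

STAGES = ["integration", "test", "production"]


@dataclass(frozen=True)
class Image:
    """An immutable build artifact identified by a hash of its contents."""
    repository: str
    digest: str

    @property
    def reference(self) -> str:
        # The repo@sha256:<hex> form pins one exact image, unlike a mutable tag.
        return f"{self.repository}@{self.digest}"


def build_once(repository: str, artifact: bytes) -> Image:
    """Stand-in for an image build: identity is derived from the content."""
    return Image(repository, "sha256:" + hashlib.sha256(artifact).hexdigest())


def promotion_plan(image: Image, config: Dict[str, Dict[str, str]]) -> List[dict]:
    """Deploy the same image to every stage; only runtime settings differ."""
    return [
        {"stage": stage, "image": image.reference, "env": config.get(stage, {})}
        for stage in STAGES
    ]
```

Pinning deployments to the digest rather than a tag is what makes the promotion trustworthy: a tag such as `latest` can be re-pointed, but a digest cannot.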

3) Immutable infrastructure

Immutable infrastructure is a relatively new concept in the DevOps ecosystem. Instead of updating existing infrastructure in place, a completely new set of infrastructure is built for every deployment based on the update requirements, and the previous infrastructure is retired.

This approach benefits DevOps in the following ways:

- Reduced downtime

- Thorough testing

- Automated failure recovery

- Quick deployments and easier rollback

Containerization in DevOps naturally supports the concept of immutable infrastructure. Because container images are immutable artifacts, a container can be deployed in the same manner regardless of the environment: Dev, Test, or Prod.
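A minimal sketch of the replace-rather-than-update pattern follows, loosely modeled on blue/green deployment (a well-known technique swapped in for illustration, not one this article prescribes). `Environment` and `Deployer` are hypothetical names; in a real system the health check would probe running containers, and retired environments would eventually be decommissioned.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Environment:
    """One immutable deployment: never patched after creation."""
    version: str
    image: str


class Deployer:
    """Routes traffic to exactly one environment; replaces, never updates."""

    def __init__(self) -> None:
        self._history: List[Environment] = []

    @property
    def live(self) -> Optional[Environment]:
        return self._history[-1] if self._history else None

    def deploy(self, version: str, image: str,
               healthy: Callable[[Environment], bool]) -> Environment:
        # Build a brand-new environment instead of modifying the live one.
        candidate = Environment(version, image)
        if not healthy(candidate):
            # The live environment was never touched, so nothing needs repair.
            raise RuntimeError(f"{version} failed its health check; keeping {self.live}")
        self._history.append(candidate)  # switch traffic to the new environment
        return candidate

    def rollback(self) -> Environment:
        # The previous environment is still intact, so rollback is a pointer switch.
        if len(self._history) < 2:
            raise RuntimeError("no previous environment to roll back to")
        self._history.pop()
        return self._history[-1]
```

A failed candidate simply never receives traffic, which is where the reduced downtime, automated failure recovery, and easier rollback listed above come from.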

4) As code

The ‘as code’ concept applied to infrastructure is one of the critical DevOps principles. Infrastructure as code is the practice of describing and managing infrastructure, including networks, virtual machines, load balancers, and connection topology, in a descriptive model that is versioned the same way the DevOps team versions source code.

Containers also support and leverage the ‘as code’ concept. At the container level, a Dockerfile defines the container in code. It lists the commands, like those a user would run on the command line, that Docker executes to build an image automatically; that image, in turn, is used to create container instances.
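To keep one language across these examples, the sketch below renders a typical Dockerfile from Python rather than showing the file directly; the rendered text is what a team would commit to version control. The base image, file names, and install command are illustrative assumptions for a small Python application, and `render_dockerfile` and `build_command` are hypothetical helpers, not part of Docker's tooling.

```python
import json
from typing import List


def render_dockerfile(base: str, workdir: str, files: List[str],
                      install: str, cmd: List[str]) -> str:
    """Render a Dockerfile from a small spec so the container definition lives in code."""
    lines = [f"FROM {base}", f"WORKDIR {workdir}"]
    lines += [f"COPY {path} ." for path in files]  # copy sources into WORKDIR
    lines.append(f"RUN {install}")                 # runs once, at image build time
    lines.append("CMD " + json.dumps(cmd))         # exec form: a JSON array
    return "\n".join(lines) + "\n"


def build_command(tag: str, context: str = ".") -> List[str]:
    """Argument list for `docker build`; pass it to subprocess.run to execute."""
    return ["docker", "build", "-t", tag, context]
```

Called with `"python:3.12-slim"`, `"/app"`, `["requirements.txt", "app.py"]`, `"pip install --no-cache-dir -r requirements.txt"`, and `["python", "app.py"]`, it produces a six-line Dockerfile ending in `CMD ["python", "app.py"]`. Because the same inputs always yield the same text, changes to the container definition show up as ordinary diffs in code review.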

Kubernetes is another area where containers support the ‘as code’ concept. Kubernetes configuration is typically defined in YAML, a human-readable data serialization language.
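Kubernetes manifests are usually written in YAML, but to stay in one language the sketch below builds a minimal `Deployment` as a Python dictionary and serializes it to JSON, which `kubectl apply -f` also accepts. The field layout follows the `apps/v1` Deployment schema; the name, image, replica count, and port are placeholders, and `deployment_manifest` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int = 2,
                        port: int = 8080) -> Dict[str, Any]:
    """Build a minimal Kubernetes apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def to_manifest_text(manifest: Dict[str, Any]) -> str:
    # Sorted keys give stable output, so diffs in version control stay small.
    return json.dumps(manifest, indent=2, sort_keys=True)
```

Generating manifests from code is one option among several; many teams simply commit hand-written YAML. Either way, the declared state (image, replica count, ports) lives in version control and can be reapplied repeatably.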

Thus, containers support a key DevOps concept that facilitates greater automation, version control, repeatable outcomes, and a faster pipeline.

In Conclusion

Containers can significantly drive DevOps maturity, but configuring them properly, managing them securely, and deploying them successfully may prove challenging.

A well-planned container strategy, backed by the right tools and technology, can deliver speed, agility, efficiency, and lower resource consumption.

Looking for a Containerization Consulting Partner? Veritis can help through our unmatched IT expertise, experienced guidance, and best practices.

We offer container services across the full lifecycle using a range of containerization tools, including Docker, Helm, Kubernetes, Amazon ECS, Amazon EKS, AWS Fargate, and Docker Compose.
