Kubernetes is a widely used container orchestration platform that lets businesses deploy and manage applications at scale. It streamlines infrastructure provisioning for microservice-based applications, and that modularity supports effective workload management. Kubernetes also provides deployment resources that fit into CI/CD pipelines by handling updates and versioning. Although rolling updates are the default deployment strategy in Kubernetes, some use cases call for a different method of delivering or updating cluster services.

When it is time to deploy production-ready containerized apps to Kubernetes, you need to select a deployment strategy. Common options include rolling updates (the default), canary releases, and blue/green deployments. Developers use these techniques to introduce code changes into production environments smoothly and safely. Combined with Kubernetes autoscaling, they make it straightforward to ship new versions of your applications.

What Is a Kubernetes Deployment Strategy?

In Kubernetes, a Deployment is a resource object that specifies the desired state of an application. Deployments are declarative: we do not spell out the steps needed to reach that state. Instead, we define the desired state and let the deployment controller take over, working automatically to bring the cluster in line with it. With a Deployment, we can describe an application’s life cycle, including the images to use, the number of pods that should exist, and how they should be updated.
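As a rough illustration of what "declaring the desired state" means, the sketch below builds a minimal `apps/v1` Deployment manifest as a plain Python dictionary and prints it as JSON. The application name `web`, the image `example/web:1.0`, and the helper `deployment_manifest` are hypothetical and chosen only for this example. A real manifest would usually carry more fields.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict.

    The manifest only states WHAT should exist (image, replica count);
    it says nothing about the steps needed to get there.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of pods that should exist
            "selector": {"matchLabels": dict(labels)},
            "template": {
                # Pod labels must match the selector above.
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image, for illustration only.
    print(json.dumps(deployment_manifest("web", "example/web:1.0", 3), indent=2))
```

Changing `replicas` or `image` and re-applying the manifest is how a new desired state would be declared. The controller works out the transition.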

With a Deployment, you can declaratively create pods and ReplicaSets. Once a desired state is specified, the deployment controller continuously checks the status of the relevant resources and creates or removes pods to match it. This reconciliation is also what Kubernetes autoscaling relies on: an autoscaler changes the desired replica count, and the controller does the rest.
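The idea of continuously matching observed state to desired state can be sketched as a toy reconciliation pass. This is not the actual controller code. The function `reconcile` and its list-of-image-names model of pods are assumptions made purely for illustration.

```python
from typing import List


def reconcile(desired_replicas: int, desired_image: str, running: List[str]) -> List[str]:
    """Return the pod list after one reconciliation pass (toy model).

    Each pod is represented only by the image it runs.
    """
    # Pods running a different image do not match the desired state.
    pods = [image for image in running if image == desired_image]
    # Too few pods: start more until the count matches.
    while len(pods) < desired_replicas:
        pods.append(desired_image)
    # Too many pods: keep only the desired number.
    return pods[:desired_replicas]


if __name__ == "__main__":
    print(reconcile(3, "example/web:1.0", ["example/web:1.0"]))
```

A real controller runs this kind of comparison repeatedly, reacting whenever either the desired or the observed state changes.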

A k8s deployment strategy specifies how to create, upgrade, or downgrade versions of an application running on Kubernetes. In a conventional software context, deployments and upgrades cause service interruption and downtime. Kubernetes helps you avoid this by offering several deployment techniques that upgrade many application instances while avoiding or minimizing downtime.

The deployment method you choose determines how your applications move from an earlier version to a newer one when you deploy them to a K8s cluster. Some methods involve downtime, while others make it possible to test a release against real traffic and analyze user behavior. Two K8s deployment methods are used most often: recreate and rolling.

Kubernetes updates applications declaratively through k8s Deployment resources. Through deployments, cluster administrators specify an application’s life cycle and how its updates should be carried out. Deployments provide an automated way for cluster objects and applications to reach and keep the intended state, so applications can be updated in a repeatable and safe manner.

With Kubernetes deployments, cluster admins can:

- Set up a replica set or pod.

- Maintain pods and replica sets.

- Revert to previous versions.

- Pause and resume a rollout.

- Scale deployments.

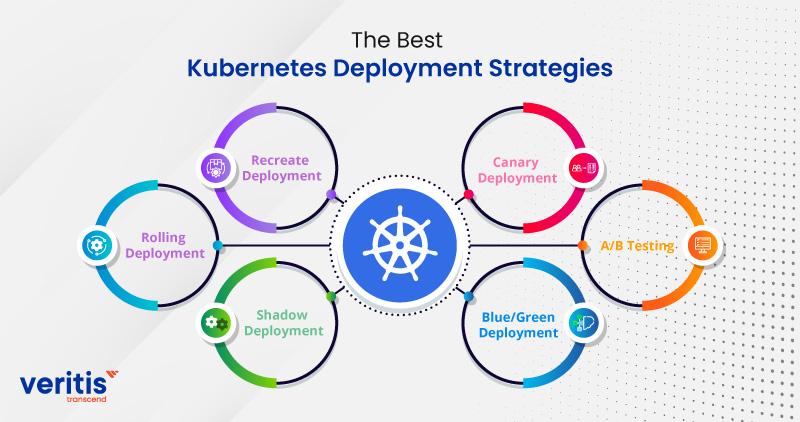

The Best Kubernetes Deployment Strategies

We’ll review several Kubernetes deployment strategies. It is crucial to understand that the Kubernetes Deployment object natively supports only two strategy types: Recreate and RollingUpdate (rolling deployments).

Blue/green and other deployment styles can be carried out in Kubernetes, but they require customization or specific tooling.

1) Recreate Deployment

A recreate deployment is an all-or-nothing method: it updates every instance of an application at once, at the cost of some downtime.

With this technique, the deployment’s current pods are terminated and pods running the new version are then started. As a result, there is downtime between the moment the old version is taken down and the moment the new pods start up and respond to user requests.
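The sequence can be sketched as a toy timeline. The generator `recreate_rollout` and its step names are hypothetical. The `RECREATE_STRATEGY` fragment mirrors the `strategy.type: Recreate` setting that sits under a Deployment’s `spec`.

```python
from typing import Iterator, List, Tuple

# Fragment that would sit under a Deployment's "spec" to select this strategy.
RECREATE_STRATEGY = {"strategy": {"type": "Recreate"}}


def recreate_rollout(replicas: int, old: str, new: str) -> Iterator[Tuple[str, List[str]]]:
    """Yield (step, running_pods) snapshots for a toy recreate rollout."""
    yield "before rollout", [old] * replicas
    # Downtime window: every old pod is gone and no new pod is serving yet.
    yield "old pods terminated", []
    yield "new pods ready", [new] * replicas


if __name__ == "__main__":
    for step, pods in recreate_rollout(3, "v1", "v2"):
        print(f"{step}: {len(pods)} pod(s) serving")
```

The middle snapshot, with zero pods serving, is the downtime the strategy accepts in exchange for never running two versions at once.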

A recreate technique is appropriate for development environments or when users prefer brief downtime over a protracted period of poor performance or faults (which might happen in a rolling deployment). The more significant the update, the more likely you are to have issues with rolling updates.

Recreate deployments are applicable in the following other scenarios:

- It is possible to plan maintenance work for off-peak hours (for instance, during the weekend for an application only accessed during business hours).

- Due to technical limitations, running two versions of the same software at the same time is impossible. With a recreate deployment, you stop the older version and only then start the new one.

2) Rolling Deployment

A rolling deployment upgrades the instances of an application to a new version gradually. The application’s pods are replaced incrementally, in pre-specified batches, rather than all at once. As a result, two versions run side by side during the rollout: the Deployment manages one ReplicaSet for the older version of the application and another for the newer one, while any Service in front of them keeps routing to whichever pods are ready.
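The batch-by-batch replacement can be sketched as a small simulation. It is a toy model under stated assumptions: new pods are treated as ready the moment they start, and the limits are plain integers. The function name `rolling_update` is hypothetical. The two limits correspond to the `maxSurge` and `maxUnavailable` fields under `spec.strategy.rollingUpdate` in a Deployment.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each batch of a toy rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        # With both limits at zero no pod could ever be replaced.
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods without dropping below replicas - max_unavailable.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        history.append((old, new))
    return history


if __name__ == "__main__":
    for old, new in rolling_update(replicas=4, max_surge=1, max_unavailable=0):
        print(f"old={old} new={new} total={old + new}")
```

With four replicas, a surge of one, and no unavailability allowed, the total never drops below four, which is why users should not notice the rollout in this model.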

A rolling deployment has the benefits of being simpler to implement, easier to roll back, and less risky than replacing every instance at once.

On the downside, it can be slow, and if something goes wrong, reverting is itself a gradual rollout rather than an instant switch. It also means that several versions of your application may be running concurrently. As a result, you might be forced to use a recreate method for legacy apps that cannot tolerate mixed versions.

3) Shadow Deployment

Shadow deployments are related to canary deployments: you test a new release using workloads from a production system. Without any involvement from end users, a shadow deployment sends a copy of the incoming traffic to the new version alongside the existing one, and users continue to receive responses from the existing version. Operators start a full rollout once the stability and performance of the new version satisfy predetermined standards.
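A minimal sketch of the mirroring idea follows. In practice this is typically handled at the proxy or service mesh layer rather than in application code. The function `mirror`, the `Handler` type, and the in-memory log are assumptions for illustration only.

```python
from typing import Callable, List, Tuple

Handler = Callable[[str], str]


def mirror(request: str, live: Handler, shadow: Handler,
           log: List[Tuple[str, str, str]]) -> str:
    """Serve the request from `live` and replay a copy against `shadow`.

    Only the live response is returned to the caller. The shadow response
    is recorded for later comparison.
    """
    live_response = live(request)
    try:
        shadow_response = shadow(request)
    except Exception as exc:  # a shadow failure must never reach the user
        shadow_response = f"error: {exc}"
    log.append((request, live_response, shadow_response))
    return live_response


if __name__ == "__main__":
    comparisons: List[Tuple[str, str, str]] = []
    answer = mirror("GET /", lambda r: "v1 ok", lambda r: "v2 ok", comparisons)
    print(answer, comparisons)
```

Comparing the recorded pairs offline is one way to judge the new version’s stability before any user depends on it.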

Shadow deployments make it possible to test a new version’s non-functional qualities, such as stability and performance. The drawback is that they are challenging to maintain and can use twice as many resources as a traditional deployment.

4) Blue/Green Deployment

Using a blue/green (or red/black) deployment method, you can deploy a new version without experiencing downtime. Green denotes the new version of the application, while blue denotes the existing one.

Using this method, one version is always active. While a green deployment is being created and tested, traffic is routed to a blue one. After the testing process is finished, you begin directing traffic to the new version. The blue deployment can either be decommissioned or kept for a future reversal.
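One common way to implement the switch is to change the label selector of the Service that fronts both deployments. The sketch below assumes the pods carry a hypothetical `version` label with the values `blue` and `green`. The names `web`, `service_manifest`, and `switch_traffic`, along with the port numbers, are likewise illustrative.

```python
import copy


def service_manifest(name: str, app: str, version: str) -> dict:
    """Build a minimal v1 Service that selects pods of one version."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Only pods carrying BOTH labels receive traffic.
            "selector": {"app": app, "version": version},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def switch_traffic(service: dict, version: str) -> dict:
    """Return a copy of the Service that points at another version."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["version"] = version
    return updated


if __name__ == "__main__":
    blue = service_manifest("web", "web", "blue")
    green = switch_traffic(blue, "green")   # cut over to the new version
    rollback = switch_traffic(green, "blue")  # reverting is the same operation
    print(green["spec"]["selector"], rollback["spec"]["selector"])
```

Because cutover and rollback are the same one-field change, keeping the blue deployment around makes reverting cheap.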

A blue/green deployment avoids downtime and lowers risk because, if a problem arises with the new version, you can roll back to the prior version by switching traffic back. Furthermore, because the entire application is switched over in a single step, versioning problems are also avoided.

Unfortunately, this tactic can be expensive because it requires double the resources to run both deployments. It also needs a means to quickly switch traffic from the blue version to the green one and back.

5) A/B Testing

A/B testing in the context of Kubernetes orchestration refers to canary deployments that route traffic to various versions of an application based on specific criteria. For instance, A/B testing can target certain users based on cookies, user agents, or other parameters, unlike a standard canary deployment that routes users based on traffic weights.
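Criteria-based routing of this kind can be sketched as a small decision function. The cookie name `experiment`, the `Mobile` user-agent check, and the version names `a` and `b` are hypothetical. In a cluster this logic would normally live in an ingress controller or service mesh rather than in application code.

```python
from http.cookies import SimpleCookie
from typing import Mapping


def choose_version(headers: Mapping[str, str]) -> str:
    """Pick version "a" or "b" from request headers (toy A/B rule)."""
    cookies = SimpleCookie()
    cookies.load(headers.get("Cookie", ""))
    # Rule 1: users explicitly enrolled through a cookie get version b.
    if "experiment" in cookies and cookies["experiment"].value == "b":
        return "b"
    # Rule 2: mobile user agents get version b.
    if "Mobile" in headers.get("User-Agent", ""):
        return "b"
    # Everyone else stays on version a.
    return "a"


if __name__ == "__main__":
    print(choose_version({"Cookie": "session=1; experiment=b"}))
    print(choose_version({"User-Agent": "Mozilla/5.0"}))
```

Unlike a weight-based split, the same user lands on the same version for as long as the criteria hold, which keeps the comparison between versions clean.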

A/B testing in Kubernetes helps determine which version of the new feature customers prefer before distributing it to all users.

6) Canary Deployment

A canary deployment lets you test a new application version with actual users before committing to a complete rollout. It is a phased rollout that follows a progressive delivery approach. Canary tactics cover several related methods, such as A/B testing and dark launches.

A canary technique typically deploys a new application version to the Kubernetes cluster incrementally while testing it with a limited amount of real-world traffic. The prior version remains available to all other users, so you can test a significant upgrade or experimental feature on a small group of active users.

A canary deployment requires two nearly identical ReplicaSets: one that rolls out new features to a small subset of users and one that serves all other active users. Once you have more confidence, you can progressively push out the new version to the entire infrastructure. Until the canary version becomes the new production version, however, most live traffic continues to be directed to the existing version.
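A weight-based split can be sketched with a deterministic hash, so that each user consistently sees the same version while the canary share grows. The functions `bucket` and `route` and the user ID format are assumptions for illustration. Real setups usually delegate this to a traffic router or to the ratio of canary to stable replicas.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def route(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent of users to the canary version."""
    return "canary" if bucket(user_id) < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for percent in (5, 25, 100):  # progressively widen the rollout
        share = sum(route(u, percent) == "canary" for u in users) / len(users)
        print(f"target {percent}% -> observed {share:.1%}")
```

Raising `canary_percent` only ever adds users to the canary group. Nobody already on the new version is moved back, which keeps the experience stable during the rollout.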

As with a blue/green deployment, there are two prerequisites that can be drawbacks: an application that can run multiple versions simultaneously and a smart traffic-routing system that can send a portion of requests to the new version.

Conclusion

Kubernetes objects are among the platform’s basic capabilities, and they enable quick delivery of application features and updates. With deployment resources, Kubernetes admins can set up a productive versioning system to manage releases while keeping application downtime to a minimum. In addition, administrators can use deployments to scale up infrastructure for increasing workloads or to roll back to previous versions of pods.

With the more sophisticated Kubernetes deployment strategies, administrators can direct traffic and requests toward particular versions, which facilitates live testing and error handling. These techniques help verify that newer features function as intended before administrators and developers roll the changes out to everyone.

Although deployment resources are the cornerstone of a persistent application state, it is always advisable to choose the deployment strategy carefully, prepare good rollback options, and take into account the dynamic nature of an ecosystem that depends on numerous loosely coupled services. This is where Veritis comes in.

Veritis, the Stevie Award winner, has the expertise to create a fantastic solution for Kubernetes services. Contact us with your requirements and experience with Kubernetes’ deployment strategies.
