MLOps is more than a trendy term in the AI and ML community. Many companies have found that they need a structured approach to developing and deploying models, and MLOps provides that structure.

Adopting MLOps may appear daunting, and many organizations struggle at first. MLOps solutions aim to make turning machine learning into usable products faster and more efficient, but getting there takes deliberate effort.

In 2023, MLOps is gaining momentum, with 90% of organizations investing in it, as per Gartner. MLOps is the top priority for 60% of organizations, according to IDC. Organizations leveraging MLOps are three times more likely to deploy models successfully (Deloitte), and MLOps can save up to 50% in time and resources (Forrester).

The MLOps market is projected to reach USD 3.1 billion by 2025, as indicated by Grand View Research. These statistics underscore the growing significance and adoption of MLOps in the machine learning field.

MLOps offers a comprehensive solution by simplifying, standardizing, and automating the entire process of ML model development and deployment, spanning the full spectrum of the data science lifecycle. This encompasses not only the initial stages but also the critical aspects of post-deployment monitoring and ongoing maintenance of ML models.

While data scientists have access to various tools for implementing MLOps within their organizations, an often-cited estimate holds that 87% of machine learning models run into serious obstacles on the way to production. This is primarily attributed to technical hurdles in model development and deployment, along with broader organizational challenges. To give your ML projects the best chance of success, integrate MLOps best practices into your workflow so that you can move quickly and efficiently.

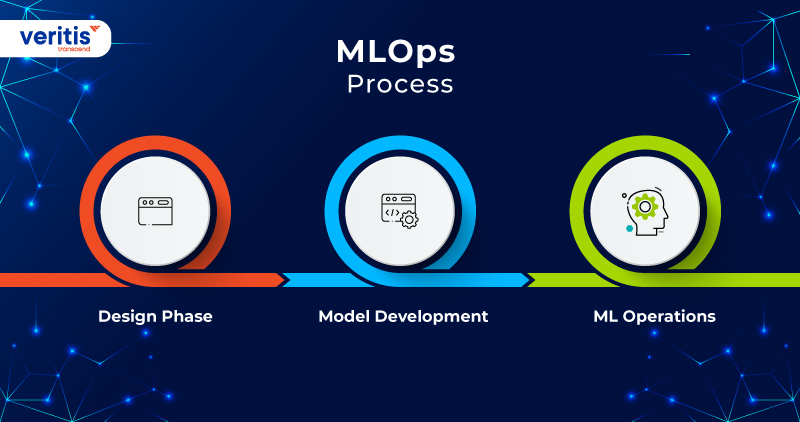

MLOps Process

The MLOps process unfolds in three phases: design, model development, and operations. Each phase is described below.

1) Design Phase

The design phase is the foundational stage for building ML-powered applications. Here, the team establishes a thorough understanding of the business problem and of the data that the ML pipeline will use.

This phase involves the identification of the target user base, strategic development of ML solutions to tackle the core issue, and a forward-looking assessment of the project’s evolution. ML projects are meticulously structured to align with one of two primary objectives: enhancing user productivity or augmenting the overall interactivity of the application.

At the outset, ML use cases are clearly defined, allowing for effective prioritization. It is common practice to tackle ML projects sequentially, focusing on a single use case at a time. Furthermore, the design phase entails meticulously examining the pertinent data required for training, coupled with delineating functional and non-functional prerequisites for the ML pipeline model.

These stipulated requirements form the blueprint for the architecture of the ML application and serve as the foundation for constructing a comprehensive test suite for the subsequent array of ML models.

To encapsulate, the design phase within the MLOps process undertakes the following key responsibilities:

- Requirements engineering

- Prioritizing ML use cases

- Verifying data availability

2) Model Development

The subsequent stage is the model development phase, which involves executing experiments by implementing a proof-of-concept ML model. These experiments are conducted to assess the model’s suitability for solving the problem.

This phase encompasses a series of crucial steps, including refining the ML algorithm, preparing the data, and optimizing the model engineering procedures. The ultimate objective is to deliver a robust and reliable ML pipeline model that can transition smoothly into the production environment.

To encapsulate, the model development phase encompasses the following critical functions:

- Data engineering: Preparing and optimizing data for modeling.

- Model engineering: Enhancing and refining the ML model.

- Creation of a proof-of-concept ML model: Implementing and assessing an initial model.

- Model testing and validation: Thoroughly examining and confirming the model’s performance.

3) ML Operations

The final phase involves deploying the ML model into a production environment, utilizing established DevOps practices like rigorous testing, version control, continuous delivery, and ongoing monitoring.

This third phase encompasses the following critical responsibilities:

- Deployment of the ML model

- Implementation of a robust CI/CD pipeline

- Vigilant monitoring and meticulous version control

It’s important to note that these three phases are intricately interconnected. Decisions made in the initial design phase have a significant impact on the subsequent model development phase and ultimately shape the outcomes in the final phase of ML operations.


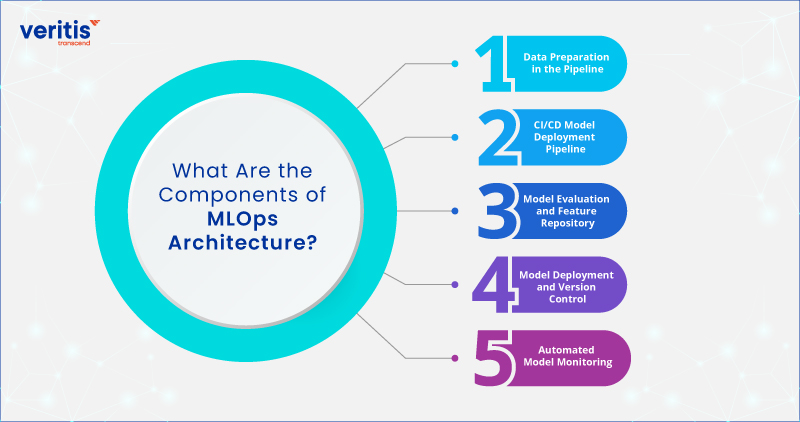

What Are the Components of MLOps Architecture?

The architecture of MLOps, or Machine Learning Operations, is a structured framework designed to facilitate the efficient development, deployment, and management of machine learning models in a production environment. It encompasses a range of essential components that work together to streamline the end-to-end machine learning lifecycle. In this context, we will explore the essential components that form the foundation of a robust MLOps architecture.

1) Data Preparation in the Pipeline

Within the pipeline, retrieving data from storage triggers the data preparation process. This phase covers data cleansing, validation, and formatting, which together bring the data into a state suitable for training the machine learning model.

2) CI/CD Model Deployment Pipeline

The model code is validated, built, and deployed through a Continuous Integration and Continuous Delivery (CI/CD) pipeline. This automated pipeline takes in the data prepared in the preceding step and starts the model training process. Automated tests and assessments then confirm that the model is fit for deployment and that its performance consistently meets predefined minimum standards.
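
The check against predefined minimum standards can be pictured as a small gate that runs after evaluation. The following is a minimal sketch, not a prescribed implementation: the name `quality_gate`, the metric names, and the threshold values are all hypothetical, and a real pipeline would call something similar from its own CI/CD tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateResult:
    passed: bool
    failures: list


def quality_gate(metrics, minimums):
    """Compare evaluation metrics against minimum thresholds.

    A metric that is missing from `metrics` counts as a failure, so a
    broken evaluation step cannot silently pass the gate.
    """
    failures = []
    for name, minimum in minimums.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < {minimum:.3f}")
    return GateResult(passed=not failures, failures=failures)


if __name__ == "__main__":
    # Hypothetical metrics produced by an evaluation step.
    candidate = {"accuracy": 0.91, "recall": 0.78}
    result = quality_gate(candidate, {"accuracy": 0.90, "recall": 0.80})
    print(result.passed, result.failures)
```

A pipeline step could stop the deployment whenever `passed` is false and surface the `failures` list in the build log.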

3) Model Evaluation and Feature Repository

An in-depth model evaluation pinpoints the high-performing features. These features are then moved to a dedicated feature repository, where they serve as a resource for training future models and so contribute to ongoing model enhancement and optimization.

4) Model Deployment and Version Control

Once the model has undergone rigorous validation and evaluation, it seamlessly advances to the deployment stage, where it becomes an integral component of the production environment, facilitating real-time inferences. Simultaneously, the new model is meticulously preserved in a version control system. This practice not only enables effective tracking of model versions but also provides the capability to revert to previous iterations should the need arise, ensuring a robust and adaptable MLOps architecture.
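
To make the idea of tracking versions and reverting concrete, here is a deliberately small in-memory sketch. The class `ModelRegistry` and its methods are hypothetical names chosen for illustration. A production setup would typically rely on a dedicated model registry or artifact store rather than a Python list.

```python
class ModelRegistry:
    """In-memory registry: stores versions and tracks which one is live."""

    def __init__(self):
        self._versions = []   # list of (version_number, artifact)
        self._live = None     # version number currently serving traffic

    def register(self, artifact):
        """Store a new artifact and return its version number."""
        version = len(self._versions) + 1
        self._versions.append((version, artifact))
        return version

    def promote(self, version):
        """Mark an existing version as the one serving production."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self._live = version

    def rollback(self):
        """Point production back at the version before the live one."""
        if self._live is None or self._live == 1:
            raise RuntimeError("no earlier version to roll back to")
        self._live -= 1
        return self._live

    def live_artifact(self):
        """Return the artifact currently live, or None if nothing is live."""
        if self._live is None:
            return None
        return self._versions[self._live - 1][1]
```

Because every registered artifact is kept, reverting is only a matter of moving the "live" pointer, which is what makes a rollback fast.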

5) Automated Model Monitoring

Throughout the model’s operational lifecycle, automated monitoring plays a pivotal role in maintaining operational integrity. It diligently verifies the system’s adherence to expected standards, promptly detects any performance anomalies or drift, and stands ready to facilitate rapid maintenance in the event of any issues, thereby upholding the resilience and effectiveness of the MLOps infrastructure.
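
The text above does not name a specific drift metric. One commonly used option is the population stability index (PSI), which compares how a feature is distributed in a baseline sample and in live data. The sketch below is an illustration under that assumption. The function name and the default bin count are choices made here, not part of any particular monitoring product.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a live sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the baseline range land in
            # the first or last bin instead of being dropped.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps log() defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and a value above 0.25 as a significant shift, though the right alert threshold depends on the feature and the business context.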


The Importance of Integrating MLOps Best Practices

Transforming a machine learning model from its conceptualization to deployment and continuous monitoring is an intricate and time-intensive endeavor. Furthermore, as an organization endeavors to expand its data science operations, the complexity of this process increases exponentially. Without MLOps practices and the requisite infrastructure, organizations are compelled to execute the arduous manual tasks of model development, validation, deployment, monitoring, and management.

This undertaking is extensive: it requires coordinating multiple teams, engaging various stakeholders, and drawing on diverse skill sets. The underlying challenge is that each model is typically built and deployed in its own way, depending on the specific use case, which often leads to inefficiency and bottlenecks.

MLOps establishes a set of uniform procedures powered by advanced technology, which significantly simplifies the stages within the data science lifecycle. Activities that might typically consume several months in the absence of MLOps can be expedited to a few days.

An essential factor lies in the automation of model monitoring, ensuring that models consistently maintain their expected performance or are promptly rectified if issues arise. The outcome is the achievement of heightened precision, resilience, and dependability of models deployed in production.

Integrating MLOps best practices into your organizational processes is imperative for a multitude of compelling reasons:

1) Cost Optimization

Through the automation of workflows, continuous monitoring of resource allocation, and fine-tuning of model training and deployment, MLOps practices play a pivotal role in enabling organizations to curtail the infrastructure and operational expenses linked to their machine learning solutions.

2) Enhanced Model Quality

MLOps methodologies prioritize the principles of continuous integration and continuous deployment (CI/CD), guaranteeing that models undergo ongoing testing and validation procedures before deployment. This meticulous approach serves to elevate the caliber of models and diminish the potential for errors or complications in the production environment.

3) Scalability and Reliability

Adopting MLOps best practices plays a pivotal role in securing the efficient and dependable scalability of ML solutions. This is achieved through optimizing resource allocation, proficient management of dependencies, and vigilant monitoring of system performance. Consequently, the likelihood of encountering bottlenecks, failures, or performance deterioration in production environments is significantly mitigated.

4) Quick Development and Deployment

MLOps solutions simplify the journey from model development through rigorous testing to deployment by automating repetitive tasks and fostering close cooperation among data scientists, ML engineers, and IT operations teams. The outcome is a shorter time-to-market for machine learning solutions.

5) Maintenance and Monitoring

MLOps methodologies prioritize the ongoing surveillance of model performance and proactive maintenance to uphold peak functionality. By diligently monitoring aspects such as model drift, data quality, and essential metrics, teams are equipped to detect and rectify potential issues well before they reach a critical stage. This vigilance guarantees that machine learning solutions maintain their accuracy and effectiveness over time.


MLOps Best Practices

The journey of developing an ML model, from its inception to deployment and continuous monitoring, is undeniably intricate and time-consuming. As the scope and scale of ML projects expand, this complexity grows exponentially.

Additionally, without dedicated ML pipeline infrastructure, organizations are compelled to engage in the labor-intensive manual processes of model development, validation, deployment, monitoring, and management. This multifaceted endeavor necessitates the collaboration of multiple teams with various stakeholders involved. Overall, the manual management of MLOps presents a substantial undertaking that can be significantly streamlined by implementing effective MLOps practices.

Employing technology-backed standard MLOps practices can provide stability to the data science lifecycle. Consequently, tasks that conventionally span several months can be efficiently executed in days, culminating in the production of dependable machine learning models.

1) Automated Dataset Validation

Effective data validation is a cornerstone of building high-quality ML models. With proper validation techniques and suitable training methods, the resulting models can produce more accurate predictions. Detecting and correcting errors in datasets is essential to sustaining the performance of the ML model.

Key steps in this process include:

- Identifying and addressing duplicate data entries.

- Managing missing values within the dataset.

- Filtering out outliers and anomalies.

- Eliminating unwanted or irrelevant data fragments.

Dataset validation can become intricate, particularly when handling large datasets from various sources and in diverse formats. To streamline this process and enhance overall ML system performance, the adoption of automated data validation tools proves beneficial. For instance, TensorFlow Data Validation (TFDV) stands as an exemplary tool that empowers developers with automatic schema generation, simplifying data cleansing and anomaly detection, which traditionally required manual intervention.
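
The key steps listed above (duplicates, missing values, outliers) can be sketched with the Python standard library alone. This is not TFDV and does not imitate its API. It is a simplified illustration in which the function name `validate_rows`, the field names, and the z-score cutoff of 3.0 are all assumptions.

```python
import statistics


def validate_rows(rows, required, numeric_field, z_max=3.0):
    """Return (clean_rows, report) after three basic checks."""
    report = {"duplicates": 0, "missing": 0, "outliers": 0}

    # 1. Drop exact duplicates while keeping the first occurrence.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:
            report["duplicates"] += 1
        else:
            seen.add(key)
            unique.append(row)

    # 2. Drop rows where a needed field is absent or None.
    needed = set(required) | {numeric_field}
    complete = []
    for row in unique:
        if all(row.get(field) is not None for field in needed):
            complete.append(row)
        else:
            report["missing"] += 1

    # 3. Drop rows whose numeric field lies far from the mean (z-score).
    values = [row[numeric_field] for row in complete]
    if len(values) >= 2 and statistics.pstdev(values) > 0:
        mean, std = statistics.mean(values), statistics.pstdev(values)
        kept = [
            row for row in complete
            if abs(row[numeric_field] - mean) / std <= z_max
        ]
        report["outliers"] = len(complete) - len(kept)
        complete = kept
    return complete, report
```

Returning a report alongside the cleaned rows lets an automated job log how much data each check removed, which is often the first signal that an upstream source has changed. Note that a z-score cutoff only works on reasonably large samples, since a single extreme value inflates the standard deviation of a small one.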

2) Collaborative Work Environment

Innovation in machine learning thrives within collaborative cultures that promote spontaneous interactions and foster nonlinear workflows. When teams not directly involved in model development struggle to locate state-of-the-art scripts or duplicate colleagues’ efforts, valuable time is wasted. Creating a collaborative workspace ensures that teams remain synchronized with management and stakeholders, fostering transparency around the progress of ML models.

Establishing a centralized hub where all teams converge to share their work, collaborate on model development, and devise solutions for post-deployment model monitoring offers a streamlined approach to ML operations. This environment facilitates the free exchange of ideas, expediting the model development process. For instance, SCOR, one of the world’s largest reinsurers, exemplifies this collaborative approach through its ‘Data Science Center of Excellence,’ which has enabled them to meet customer needs 75% faster than their previous efforts.

3) Application Monitoring

An ML model’s effectiveness can deteriorate when it is fed error-prone data, which is why machine learning pipelines need continuous monitoring during business operations. It is highly advisable to use automated continuous monitoring (CM) tools when releasing an ML model to a production environment. These tools promptly identify performance degradation and support real-time updates, helping to sustain the quality of datasets. They also extend beyond data quality to operational metrics such as latency, downtime, and response time.
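
As an illustration of monitoring an operational metric, the sketch below checks 95th-percentile latency against a budget. The function names, the nearest-rank percentile method, and the budget values are assumptions made for this example. Dedicated monitoring tools compute such figures over sliding time windows.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct in 0-100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_latency(latencies_ms, p95_budget_ms):
    """Return an alert string if p95 latency exceeds the budget, else None."""
    p95 = percentile(latencies_ms, 95)
    if p95 > p95_budget_ms:
        return f"p95 latency {p95} ms exceeds budget {p95_budget_ms} ms"
    return None
```

Alerting on a high percentile rather than the average keeps a handful of very slow requests from being hidden by many fast ones.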

Consider an e-commerce website running a time-bound sale and relying on ML algorithms for user recommendations. If a technical glitch causes irrelevant recommendations to be served, the site’s conversion rate plummets, hurting overall business outcomes. Such problems can be preempted with post-deployment data audits and monitoring tools that keep the machine learning pipeline running smoothly. This proactive approach not only improves the model’s performance but also reduces the need for manual intervention.

In a tangible example, the engineering team at DoorDash, a global logistics platform, has adopted a monitoring tool to mitigate ML model drift, a common issue stemming from changes in data. This shows the practical significance of continuous monitoring in real-world applications.

4) Reproducibility

Reproducibility in machine learning means preserving every facet of the ML workflow so that model artifacts and results can be faithfully replicated. These artifacts act as a roadmap that lets stakeholders retrace the entire course of model development, much as a Jupyter Notebook helps developers document and share their code. MLOps workflows often lack this kind of built-in documentation.

A pragmatic solution to this challenge involves establishing a centralized repository to compile artifacts at various junctures in the model development process. Reproducibility holds profound importance as it empowers data scientists to showcase how the model achieved its results transparently. It further enables validation teams to recreate identical results, and should another team wish to build upon an existing model rather than starting from scratch, they can seamlessly leverage the central repository. This provision ensures that professional efforts are maximized, reducing redundancy and promoting innovation.

Airbnb’s Bighead, an end-to-end machine learning platform, illustrates this concept in practice by building reproducibility and iteration into ML model development.
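
Two small habits go a long way toward reproducible runs: fixing random seeds and fingerprinting the inputs of each run so that a stored artifact can be matched to the exact configuration and data that produced it. The sketch below assumes a JSON-serializable configuration with a hypothetical `seed` key. The `train` function is a toy stand-in, not a real training routine.

```python
import hashlib
import json
import random


def run_fingerprint(config, data_bytes):
    """Hash the config and training data so a run can be identified later."""
    digest = hashlib.sha256()
    # sort_keys makes the hash independent of dict insertion order.
    digest.update(json.dumps(config, sort_keys=True).encode("utf-8"))
    digest.update(data_bytes)
    return digest.hexdigest()


def train(config, data):
    """Stand-in for training: seeded shuffle, then the mean of one half."""
    rng = random.Random(config["seed"])  # local RNG, no global state
    shuffled = data[:]
    rng.shuffle(shuffled)
    half = shuffled[: len(shuffled) // 2]
    return sum(half) / len(half)
```

Storing the fingerprint next to each artifact in the central repository lets a validation team confirm that it is rerunning the same configuration on the same data before comparing results.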

5) Experiment Tracking

Developers and data scientists frequently craft multiple models tailored to distinct business use cases. This process typically involves preliminary experiments to select the most promising model for advancement to the production stage. It becomes imperative to maintain a systematic record of scripts, datasets, model architectures, and the outcomes of these experiments, aiding in the decision-making process to identify the model best suited for production.

An illustrative instance of this approach is Domino, a centralized system renowned for its comprehensive tracking of all data science endeavors. Domino’s ‘Enterprise MLOps Platform’ serves as a reservoir for preserving a wealth of data science work-related information, encompassing reproducible and reusable code, artifacts, and outcomes from prior experiments, among other crucial components. Such MLOps platforms play a pivotal role in preserving an organized record of experiments, facilitating the selection of the most viable model for deployment in production environments.
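
At its simplest, the systematic record described above is an append-only log of runs that can be queried for the best result. The following sketch is not how Domino or any other platform works internally. `ExperimentLog`, its methods, and the JSON Lines file format are assumptions chosen to keep the example self-contained.

```python
import json
from pathlib import Path


class ExperimentLog:
    """Append-only JSON Lines log of experiment runs."""

    def __init__(self, path):
        self.path = Path(path)

    def record(self, name, params, metrics):
        """Append one run with its parameters and resulting metrics."""
        entry = {"name": name, "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(entry) + "\n")

    def runs(self):
        """Return all recorded runs in the order they were logged."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as fh:
            return [json.loads(line) for line in fh if line.strip()]

    def best(self, metric):
        """Return the run with the highest value of `metric`, or None."""
        scored = [r for r in self.runs() if metric in r["metrics"]]
        return max(scored, key=lambda r: r["metrics"][metric], default=None)
```

Even a log this small answers the question that matters when choosing a model for production: which parameters produced which result.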

Conclusion

The urge to deploy ML models quickly and efficiently is a driving force behind the development of MLOps. As organizations accelerate their machine learning initiatives, many new models are emerging, fueling the demand for MLOps to facilitate rapid production deployment. While MLOps may still be in its nascent stages, it’s discernible that companies are increasingly gravitating towards a set of guiding principles aimed at enhancing their return on investment in the realm of machine learning.

Veritis, winner of the Stevie and Globee Business Awards, offers top-notch MLOps services to complement your journey. With our expertise, your organization can confidently navigate the intricate terrain of MLOps and ensure that its machine learning efforts achieve the efficiency and speed required for success. As the demand for MLOps solutions continues to rise, we remain committed to delivering top-tier services that help your organization realize the full potential of its machine learning endeavors.
